# Study Platform Documentation

## SHiNE-Framework

Welcome to V-SHiNE: Virtual Smart Home with iNtelligent and Explainability Features!

- [Introduction](https://exmartlab.github.io/SHiNE-Framework/index.md): Welcome to V-SHiNE: Virtual Smart Home with iNtelligent and Explainability Features!

### search

- [Search the documentation](https://exmartlab.github.io/SHiNE-Framework/search.md)

### architecture

The V-SHINE Study Platform is a distributed web application designed for conducting smart home simulation research. The architecture employs real-time communication, modular services, and flexible data storage to support interactive research studies.

- [V-SHINE Platform Architecture](https://exmartlab.github.io/SHiNE-Framework/architecture.md): The V-SHINE Study Platform is a distributed web application designed for conducting smart home simulation research. The architecture employs real-time communication, modular services, and flexible data storage to support interactive research studies.

### game-config

To configure the game, both JSON files game.json and explanation.json are required in the folder platform/src/*.

- [Configuration](https://exmartlab.github.io/SHiNE-Framework/game-config.md): To configure the game, both JSON files game.json and explanation.json are required in the folder platform/src/*.

#### database

Introduction

- [Database](https://exmartlab.github.io/SHiNE-Framework/game-config/database.md): Introduction

#### explanation_engine

Introduction

- [Explanation Engine](https://exmartlab.github.io/SHiNE-Framework/game-config/explanation_engine.md): Introduction

#### game_schema

The game.json file defines the full structure of a smart home simulation used in our game-based studies. It coordinates all key components that drive user experience, system behavior, and experimental logic.

- [Game Config Schema](https://exmartlab.github.io/SHiNE-Framework/game-config/game_schema.md): The game.json file defines the full structure of a smart home simulation used in our game-based studies. It coordinates all key components that drive user experience, system behavior, and experimental logic.

- [Devices](https://exmartlab.github.io/SHiNE-Framework/game-config/game_schema/devices.md): Introduction

- [Environment](https://exmartlab.github.io/SHiNE-Framework/game-config/game_schema/environment.md): Temporal Configuration of the Simulation Environment:

- [Interacting with Devices](https://exmartlab.github.io/SHiNE-Framework/game-config/game_schema/interaction_types.md): Introduction

- [Rules](https://exmartlab.github.io/SHiNE-Framework/game-config/game_schema/rules.md): Introduction

- [Tasks](https://exmartlab.github.io/SHiNE-Framework/game-config/game_schema/tasks.md): Introduction

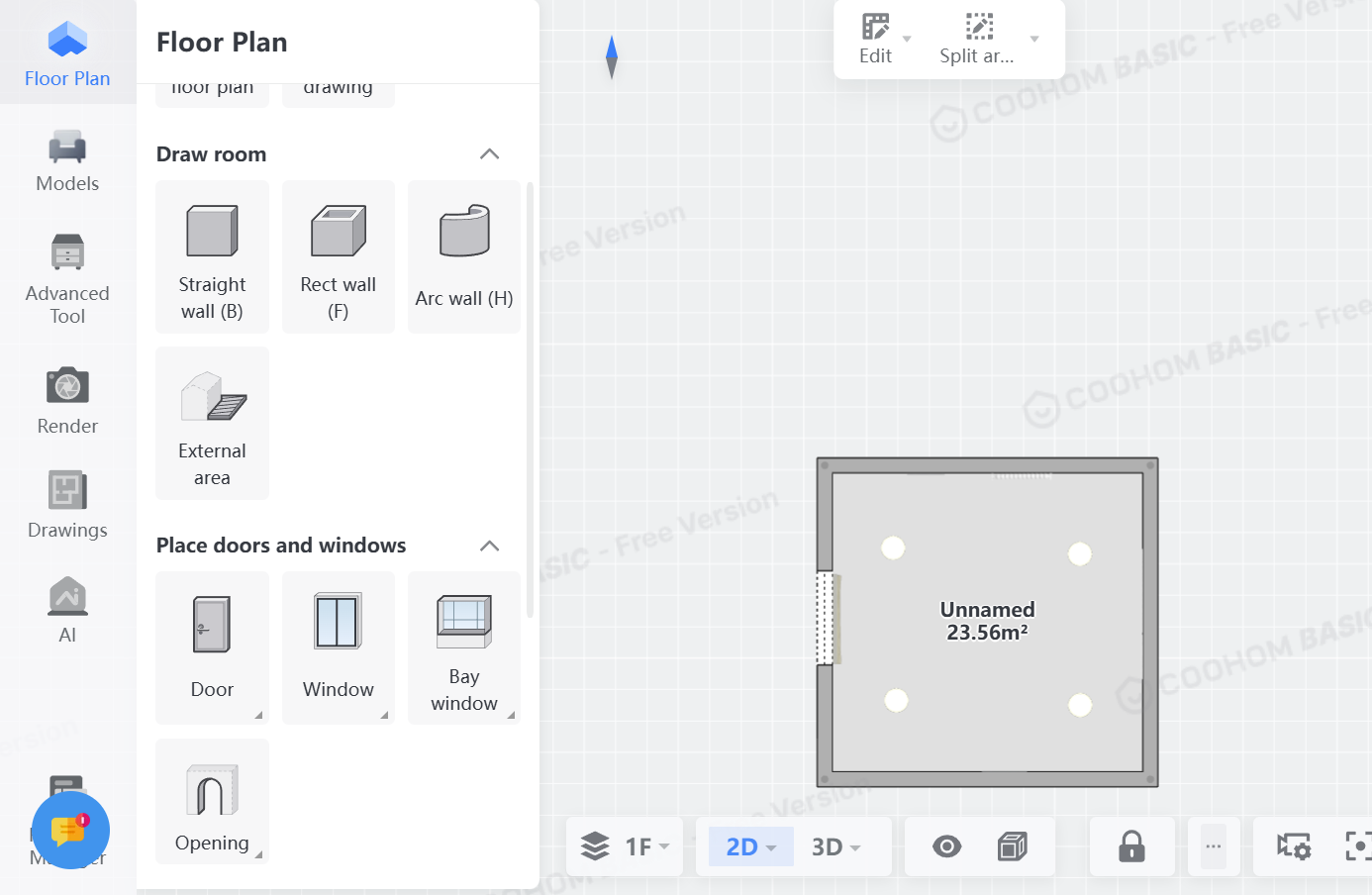

- [Walls](https://exmartlab.github.io/SHiNE-Framework/game-config/game_schema/walls.md): Introduction

### getting-started

This guide helps you understand the platform architecture and create your first smart home study. After completing the Installation, you're ready to build your first interactive smart home scenario.

- [Getting Started](https://exmartlab.github.io/SHiNE-Framework/getting-started.md): This guide helps you understand the platform architecture and create your first smart home study. After completing the Installation, you're ready to build your first interactive smart home scenario.

### installation

This guide covers the installation requirements and setup process for the V-SHINE Study Platform.

- [Installation](https://exmartlab.github.io/SHiNE-Framework/installation.md): This guide covers the installation requirements and setup process for the V-SHINE Study Platform.

### scenario

This section contains example scenarios demonstrating how to configure and implement smart home simulation studies using the V-SHiNE platform.

- [Scenarios](https://exmartlab.github.io/SHiNE-Framework/scenario.md): This section contains example scenarios demonstrating how to configure and implement smart home simulation studies using the V-SHiNE platform.

#### default-scenario

Introduction

- [Default Scenario](https://exmartlab.github.io/SHiNE-Framework/scenario/default-scenario.md): Introduction

#### scenario-2

Introduction

- [CIRCE Scenario](https://exmartlab.github.io/SHiNE-Framework/scenario/scenario-2.md): Introduction

### testing

The V-SHINE Study Platform employs a comprehensive test suite built with Vitest to ensure reliability across all critical components of the smart home simulation platform.

- [Test Suite Documentation](https://exmartlab.github.io/SHiNE-Framework/testing.md): The V-SHINE Study Platform employs a comprehensive test suite built with Vitest to ensure reliability across all critical components of the smart home simulation platform.

---

# Full Documentation Content

# V-SHINE Platform Architecture

The V-SHINE Study Platform is a distributed web application designed for conducting smart home simulation research. The architecture employs real-time communication, modular services, and flexible data storage to support interactive research studies.

## System Overview

The platform consists of four main components working together:

```
┌─────────────────────────────────────────────────────────────────────────────────┐
│                             V-SHINE Study Platform                              │
├─────────────────────────────────────────────────────────────────────────────────┤
│                                                                                 │
│ ┌─────────────────────────┐                 ┌─────────────────────────────┐     │
│ │    V-SHINE FRONTEND     │◄───Socket.IO───►│       V-SHINE BACKEND       │     │
│ │                         │                 │                             │     │
│ │  ┌──────────────────┐   │                 │  ┌──────────────────────┐   │     │
│ │  │ React Components │   │   WebSocket     │  │ Socket Handlers      │   │     │
│ │  │ • Study Page     │   │   Events:       │  │ • Device Interaction │   │     │
│ │  │ • Task Management│   │   • device-int  │  │ • Task Management    │   │     │
│ │  │ • Explanations   │   │   • game-start  │  │ • Explanation Req    │   │     │
│ │  └──────────────────┘   │   • task-abort  │  │ • Game Events        │   │     │
│ │                         │                 │  └──────────────────────┘   │     │
│ │  ┌──────────────────┐   │                 │                             │     │
│ │  │ Phaser 3 Game    │   │                 │  ┌──────────────────────┐   │     │
│ │  │ • GameScene      │   │                 │  │ Next.js APIs         │   │     │
│ │  │ • Device Objects │───┼─────────────────┼──┤ • /api/create-session│   │     │
│ │  │ • Room Layout    │   │  HTTP Requests  │  │ • /api/game-data     │   │     │
│ │  │ • Smarty         │   │                 │  │ • /api/verify-session│   │     │
│ │  └──────────────────┘   │                 │  │ • /api/complete-study│   │     │
│ └─────────────────────────┘                 │  └──────────────────────┘   │     │
│                                             │              │              │     │
│                                             │              ▼              │     │
│                                             │  ┌──────────────────────┐   │     │
│                                             │  │ MongoDB Driver       │   │     │
│                                             │  │ • Connection Pool    │   │     │
│                                             │  │ • Session Mgmt       │   │     │
│                                             │  └──────────────────────┘   │     │
│                                             └──────────────┬──────────────┘     │
│                                                            │                    │
│ ┌──────────────────────────────────────────────────────────┼──────────────┐     │
│ │                            MONGODB DATABASE              │              │     │
│ │                                                          ▼              │     │
│ │  ┌──────────┐  ┌──────────┐  ┌──────────┐  ┌──────────┐  ┌──────────┐   │     │
│ │  │sessions  │  │tasks     │  │devices   │  │expl.     │  │logs      │   │     │
│ │  │• metadata│  │• status  │  │• states  │  │• content │  │• events  │   │     │
│ │  │• socketId│  │• timing  │  │• values  │  │• ratings │  │• timing  │   │     │
│ │  └──────────┘  └──────────┘  └──────────┘  └──────────┘  └──────────┘   │     │
│ └─────────────────────────────────────────────────────────────────────────┘     │
│                                                                                 │
│ ┌─────────────────────────────────────────────────────────────────────────┐     │
│ │                 EXTERNAL EXPLANATION ENGINE (Optional)                  │     │
│ │                                                                         │     │
│ │    ┌─────────────────────────┐           ┌─────────────────────────┐    │     │
│ │    │ REST Interface          │◄─────────►│ WebSocket Interface     │    │     │
│ │    │                         │           │                         │    │     │
│ │    │ POST /logger            │           │ Socket.IO Client        │    │     │
│ │    │ POST /explanation       │───────────│ • user_log (emit)       │    │     │
│ │    │                         │           │ • explanation_receival  │    │     │
│ │    │ HTTP Request/Response   │           │   (listen)              │    │     │
│ │    └─────────────────────────┘           │                         │    │     │
│ │                 ▲                        │ Real-time bidirectional │    │     │
│ │                 │                        └─────────────────────────┘    │     │
│ │                 │                                     ▲                 │     │
│ │                 └─────────────────────────────────────┘                 │     │
│ │                        Backend selects interface                        │     │
│ │                    based on explanation_config.json                     │     │
│ └─────────────────────────────────────────────────────────────────────────┘     │
└─────────────────────────────────────────────────────────────────────────────────┘
```

## Core Components

### 🎮 V-SHINE Frontend

**Technology Stack**: React 19 + Next.js 15 + Phaser 3 + Socket.IO Client + TypeScript

The frontend combines traditional web UI components with a game engine for interactive smart home simulation.

#### React Layer (`/src/app/study/`)

* **Study Page**: Main orchestrator managing WebSocket connections and React state

* **Environment Bar**: Task display and progress tracking

* **Smart Home Sidebar**: Device status and control interface

* **Task Abort Modal**: Task management with user feedback

* **Socket Service**: Centralized WebSocket communication manager

#### Phaser 3 Game Engine (`/src/app/study/game/`)

* **GameScene.ts**: Main coordinator setting up rooms, devices, and Smarty assistant

* **Device.ts**: Interactive device objects with visual states and click handlers

* **Room.ts**: Spatial boundaries and device containers

* **Smarty.ts**: Virtual assistant avatar for guidance

* **EventsCenter.ts**: Bridge between React and Phaser using event emitters
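The bridge role of `EventsCenter.ts` can be pictured with a small shared emitter. This is only a sketch: the `TinyEmitter` class stands in for whatever emitter the project actually uses, and the `device-clicked` event name and its payload are hypothetical.

```typescript
// Minimal stand-in for the emitter that a module like EventsCenter.ts exposes.
// Class name, event name, and payload shape are illustrative assumptions.
type Listener = (payload: unknown) => void;

class TinyEmitter {
  private listeners: Record<string, Listener[]> = {};

  // Register a listener and return a function that removes it again.
  on(event: string, listener: Listener): () => void {
    const current = this.listeners[event] || [];
    this.listeners[event] = current.concat([listener]);
    return () => {
      this.listeners[event] = (this.listeners[event] || []).filter(
        (registered) => registered !== listener
      );
    };
  }

  // Call every listener registered for the event, in registration order.
  emit(event: string, payload: unknown): void {
    (this.listeners[event] || []).forEach((listener) => listener(payload));
  }
}

// One shared instance would be imported by both the React and Phaser code.
const eventsCenter = new TinyEmitter();

// React side: subscribe and keep the cleanup function.
const received: unknown[] = [];
const unsubscribe = eventsCenter.on("device-clicked", (payload) => {
  received.push(payload);
});

// Phaser side: announce a click without importing any React code.
eventsCenter.emit("device-clicked", { deviceId: "deep_fryer" });

// After cleanup, further events no longer reach the listener.
unsubscribe();
eventsCenter.emit("device-clicked", { deviceId: "deep_fryer" });
```

Returning the unsubscribe function from `on` keeps cleanup next to the subscription, which suits components that mount and unmount.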

#### Frontend-Backend Communication

```
// Socket.IO Events - Frontend Emits
{
  'device-interaction': { sessionId, device, interaction, value },
  'game-start': { sessionId },
  'task-abort': { sessionId, taskId, reason },
  'explanation_request': { sessionId, deviceId },
  'explanation_rating': { sessionId, explanationId, rating }
}

// Socket.IO Events - Frontend Listens
{
  'update-interaction': { device, interaction, value, source },
  'explanation': { content, rating_options, explanationId },
  'game-update': { task_completed, next_task, device_updates }
}
```
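The event listing above can be written as payload types so that a missing field is caught at compile time. This is a sketch under assumptions: the field names come from the listing, but every field type, the `Emitter` interface, and the `emitTyped` and `explanationText` helpers are hypothetical.

```typescript
// Field names follow the event listing; every field type is an assumption.
type DeviceValue = boolean | number | string;

interface FrontendEmits {
  "device-interaction": { sessionId: string; device: string; interaction: string; value: DeviceValue };
  "game-start": { sessionId: string };
  "task-abort": { sessionId: string; taskId: string; reason: string };
  "explanation_request": { sessionId: string; deviceId: string };
  "explanation_rating": { sessionId: string; explanationId: string; rating: string };
}

interface FrontendListens {
  "update-interaction": { device: string; interaction: string; value: DeviceValue; source: string };
  "explanation": { content: string; rating_options: string[]; explanationId: string };
  "game-update": { task_completed: unknown; next_task: unknown; device_updates: unknown };
}

// Anything with a Socket.IO-style emit(name, payload) method fits here.
interface Emitter {
  emit(event: string, payload: unknown): void;
}

// Hypothetical helper: the compiler rejects payloads that miss a field.
function emitTyped<K extends keyof FrontendEmits>(
  socket: Emitter,
  event: K,
  payload: FrontendEmits[K]
): void {
  socket.emit(event, payload);
}

// Hypothetical handler for the incoming explanation event.
function explanationText(payload: FrontendListens["explanation"]): string {
  return payload.content;
}

// A recording stub stands in for a real socket connection.
const sent: Array<{ event: string; payload: unknown }> = [];
const fakeSocket: Emitter = {
  emit: (event, payload) => {
    sent.push({ event, payload });
  },
};

const sessionId = "a9297ea7-8f3a-4fcd-abd3-ae3bed267494";
emitTyped(fakeSocket, "device-interaction", {
  sessionId,
  device: "deep_fryer",
  interaction: "Power",
  value: true,
});
emitTyped(fakeSocket, "game-start", { sessionId });
```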

### 🔧 V-SHINE Backend

**Technology Stack**: Next.js 15 + Socket.IO Server + MongoDB Driver + Node.js

The backend provides both HTTP APIs for session management and real-time Socket.IO handlers for game interactions.

#### Next.js API Routes (`/src/app/api/`)

* **`/api/create-session`**: Initializes new study session with tasks and device states

* **`/api/game-data`**: Returns game configuration merged with current device states

* **`/api/verify-session`**: Validates active sessions and handles timeouts

* **`/api/complete-study`**: Finalizes study data collection and cleanup

#### Socket.IO Event Handlers (`/src/lib/server/socket/`)

* **`deviceInteractionHandler.js`**: Core interaction processing with rule evaluation

* **`gameStartHandler.js`**: Session initialization and environment setup

* **`taskAbortHandler.js`**: Task abortion with reasoning collection

* **`taskTimeoutHandler.js`**: Automatic task timeout handling

* **`explanationRequestHandler.js`**: On-demand explanation delivery

* **`explanationRatingHandler.js`**: User feedback collection for explanations

#### Service Layer (`/src/lib/server/services/`)

* **`commonServices.js`**: Session validation, task management, rule checking

* **`rulesService.js`**: Automated device behavior and cascading updates

* **`deviceUtils.js`**: Device state management and interaction logging

### 🗄️ MongoDB Database

**Collections and Data Relationships**:

```
sessions Collection
├── sessionId (unique identifier)
├── startTime, lastActivity (timing data)
├── isCompleted, completionTime (status tracking)
├── customData (participant metadata)
├── socketId (real-time connection tracking)
└── explanation_cache (performance optimization)

tasks Collection
├── userSessionId (foreign key to sessions)
├── taskId, task_order (task identification)
├── isCompleted, isAborted, isTimedOut (status flags)
├── startTime, endTime (timing measurements)
├── taskDescription (study instructions)
└── abortedReason (user feedback)

devices Collection
├── userSessionId (foreign key to sessions)
├── deviceId (unique device identifier)
└── deviceInteraction[] (array of interaction states)
    ├── name (interaction property name)
    ├── type (boolean, numerical, stateless)
    ├── value (current state value)
    └── timestamp (last modification time)

explanations Collection
├── userSessionId (foreign key to sessions)
├── explanationId (unique identifier)
├── content (explanation text)
├── rating (user feedback: like/dislike/none)
├── triggerContext (interaction that caused explanation)
├── timestamp (generation time)
└── metadata (explanation engine details)

logs Collection
├── userSessionId (foreign key to sessions)
├── eventType (device_interaction, task_event, etc.)
├── eventData (structured event information)
├── timestamp (precise event timing)
└── metadata (additional context data)
```

### 🤖 External Explanation Engine (Optional)

The platform supports integration with external explanation services to provide AI-generated explanations for user interactions. This is an optional component that can be implemented using various technologies and approaches.

#### Integration Approach

The V-SHINE platform provides a **flexible integration layer** that supports different explanation service implementations through standardized interfaces:

**Dual Communication Support**:

* **WebSocket Interface**: Real-time bidirectional communication with asynchronous explanation delivery

* **REST Interface**: HTTP-based request/response with synchronous explanation delivery

* **Configurable Selection**: Interface choice is independent of explanation trigger mode - both support pull, push, and interactive modes

**Explanation Trigger Modes** (supported by both interfaces):

* **Pull Mode**: Explanations are cached and delivered only when user explicitly requests them

* **Push Mode**: Explanations are delivered immediately when triggered by system events

* **Interactive Mode**: Enables immediate explanations plus user message input for custom explanation requests
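The difference between the three trigger modes can be sketched as two small handlers. All names here (`TriggerMode`, `SessionState`, the handler functions) are hypothetical, the `delivered` array stands in for emitting to the frontend, and whether the cache is cleared after delivery is not specified in this document, so the sketch simply keeps it.

```typescript
type TriggerMode = "pull" | "push" | "interactive";

interface Explanation {
  content: string;
}

// Per-session state; `delivered` stands in for emitting to the frontend.
interface SessionState {
  cached: Explanation | null;
  delivered: Explanation[];
}

// Called whenever the explanation service produces an explanation.
function onExplanationGenerated(
  mode: TriggerMode,
  state: SessionState,
  explanation: Explanation
): void {
  if (mode === "pull") {
    state.cached = explanation; // hold it until the user asks
  } else {
    state.delivered.push(explanation); // push and interactive deliver at once
  }
}

// Called when the user explicitly asks for an explanation.
// Returns true if a cached explanation was delivered.
function onExplanationRequested(mode: TriggerMode, state: SessionState): boolean {
  if (mode !== "pull" || state.cached === null) {
    return false;
  }
  state.delivered.push(state.cached);
  return true;
}

// Only interactive mode accepts free-text user messages.
function acceptsUserMessages(mode: TriggerMode): boolean {
  return mode === "interactive";
}
```

Because the mode is handled separately from the transport, the same handlers would apply whether the explanation arrived over REST or WebSocket.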

**Service Requirements**:

* **Input**: Receives user interaction data (device, action, context) and optional user messages

* **Processing**: Generates explanations using AI/ML models, rule-based systems, or other approaches

* **Output**: Returns structured explanation content with optional rating mechanisms

#### Implementation Flexibility

Explanation services can be implemented using any technology stack:

* **AI/ML Services**: Integration with LLMs, expert systems, or custom models

* **Rule-Based Systems**: Template-driven explanations based on interaction patterns

* **Hybrid Approaches**: Combining multiple explanation generation strategies

* **Cloud Services**: Integration with external AI APIs or services

#### Backend Integration

```
// Factory pattern provides unified interface for any explanation service
const explanationEngine = createExplanationEngine(explanationConfig);

// Standard callback interface regardless of implementation
explanationEngine.sendUserLog(interactionData, (explanation) => {
  // Process explanation response
  socket.emit('explanation', explanation);
});
```
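To illustrate how such a factory could hide the transport behind `sendUserLog`, here is a self-contained sketch. Only the names `createExplanationEngine`, `sendUserLog`, `/logger`, `user_log`, and `explanation_receival` appear in this document; the `Transports` interface, the `connection` config field, and the assumption that the REST response carries the explanation are all hypothetical.

```typescript
interface Explanation {
  content: string;
}
type ExplanationCallback = (explanation: Explanation) => void;

interface ExplanationEngine {
  sendUserLog(interactionData: object, callback: ExplanationCallback): void;
}

// Injected transports keep the sketch runnable without a network.
interface Transports {
  httpPost(path: string, body: object, onResponse: ExplanationCallback): void;
  socketEmit(event: string, body: object): void;
  socketOn(event: string, listener: ExplanationCallback): void;
}

// Hypothetical config shape; the real configuration file may differ.
interface ExplanationConfig {
  connection: "rest" | "websocket";
}

function createExplanationEngine(
  config: ExplanationConfig,
  transports: Transports
): ExplanationEngine {
  if (config.connection === "rest") {
    return {
      sendUserLog(interactionData, callback) {
        // Assumes the explanation comes back in the HTTP response.
        transports.httpPost("/logger", interactionData, callback);
      },
    };
  }
  // WebSocket: register one listener, then route replies to the latest callback.
  let pending: ExplanationCallback | null = null;
  transports.socketOn("explanation_receival", (explanation) => {
    if (pending !== null) {
      pending(explanation);
    }
  });
  return {
    sendUserLog(interactionData, callback) {
      pending = callback;
      transports.socketEmit("user_log", interactionData);
    },
  };
}

// Fake transports that answer immediately, for demonstration only.
let socketListener: ExplanationCallback | null = null;
const fakeTransports: Transports = {
  httpPost: (path, _body, onResponse) => onResponse({ content: "via REST " + path }),
  socketEmit: (event) => {
    if (socketListener !== null) {
      socketListener({ content: "via WebSocket " + event });
    }
  },
  socketOn: (_event, listener) => {
    socketListener = listener;
  },
};

const replies: string[] = [];
const collect: ExplanationCallback = (explanation) => {
  replies.push(explanation.content);
};
createExplanationEngine({ connection: "rest" }, fakeTransports).sendUserLog({ device: "deep_fryer" }, collect);
createExplanationEngine({ connection: "websocket" }, fakeTransports).sendUserLog({ device: "deep_fryer" }, collect);
```

The caller sees the same callback signature in both cases, which is the point of the factory.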

**Example Implementation**

The repository includes a sample Python Flask explanation engine as a reference implementation, demonstrating both REST and WebSocket communication patterns. This serves as a starting point for developing custom explanation services.

## Data Flow Patterns

### 🔄 Device Interaction Flow

```
User Click (Phaser) → EventsCenter → React State → Socket.IO Client
                                                              │
                                                              ▼
Backend Socket Handler ← MongoDB Update ← Rule Evaluation ← Session Validation
          │
          ▼
Real-time Broadcast → Frontend State Sync → Phaser Visual Update
```

**Detailed Steps**:

1. **User Interaction**: User clicks device in Phaser game

2. **Event Bridge**: EventsCenter forwards to React components

3. **Socket Emission**: React emits `device-interaction` event

4. **Backend Processing**: Socket handler validates session and processes interaction

5. **Rule Evaluation**: Rules engine checks for automated device responses

6. **Database Update**: Device states and interaction logs saved to MongoDB

7. **Real-time Sync**: Updated states broadcast to all connected clients

8. **Frontend Update**: React state and Phaser visuals reflect new device states
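Steps 4 to 7 above can be sketched as one pure function over in-memory data. This is not the platform's handler: the `Rule` shape, the `"user"` and `"rule"` values for `source`, and the `cooker_hood` device are assumptions made for illustration, a plain object stands in for MongoDB, and cascading rules are left out.

```typescript
type DeviceValue = boolean | number;

interface InteractionEvent {
  sessionId: string;
  device: string;
  interaction: string;
  value: DeviceValue;
}

interface StateUpdate {
  device: string;
  interaction: string;
  value: DeviceValue;
  source: "user" | "rule";
}

// Hypothetical rule shape: when one state is set, force another.
interface Rule {
  when: { device: string; interaction: string; value: DeviceValue };
  then: { device: string; interaction: string; value: DeviceValue };
}

type DeviceStates = Record<string, Record<string, DeviceValue>>;

function handleDeviceInteraction(
  activeSessions: string[],
  states: DeviceStates,
  rules: Rule[],
  event: InteractionEvent
): StateUpdate[] {
  // Step 4: ignore events from sessions that are not active.
  if (activeSessions.indexOf(event.sessionId) === -1) {
    return [];
  }

  const updates: StateUpdate[] = [
    { device: event.device, interaction: event.interaction, value: event.value, source: "user" },
  ];

  // Step 5: collect automated responses from matching rules.
  rules.forEach((rule) => {
    const matches =
      rule.when.device === event.device &&
      rule.when.interaction === event.interaction &&
      rule.when.value === event.value;
    if (matches) {
      updates.push({ ...rule.then, source: "rule" });
    }
  });

  // Step 6: persist every update (the plain object stands in for MongoDB).
  updates.forEach((update) => {
    const deviceState = states[update.device] || {};
    deviceState[update.interaction] = update.value;
    states[update.device] = deviceState;
  });

  // Step 7: the caller would broadcast these as update-interaction events.
  return updates;
}

const states: DeviceStates = { deep_fryer: { Power: false }, cooker_hood: { Power: false } };
const rules: Rule[] = [
  {
    when: { device: "deep_fryer", interaction: "Power", value: true },
    then: { device: "cooker_hood", interaction: "Power", value: true },
  },
];
const updates = handleDeviceInteraction(["session-1"], states, rules, {
  sessionId: "session-1",
  device: "deep_fryer",
  interaction: "Power",
  value: true,
});
```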

### 📝 Explanation Generation Flow

```
Trigger Event → Backend Handler → Explanation Engine Selection
                                               │
                             ┌─────────────────┴─────────────────┐
                             ▼                                   ▼
                       REST Request                       WebSocket Event
                             │                                   │
                             ▼                                   ▼
                       HTTP Response ←───── Service ─────→ Socket Event
                             │                                   │
                             └─────────────────┬─────────────────┘
                                               ▼
                                     Explanation Callback → Database Storage
                                               │
                                               ▼
                                    Frontend Toast Display
```

### 📊 Task Management Flow

```
Task Start Event → Task Begin Logging → Frontend Task Display
        │
        ▼
User Interactions → Goal Checking → Task Completion Detection
                                               │
            ┌──────────────────────────────────┴───────────────────┐
            ▼                                                      ▼
     Task Completed                                      Task Aborted/Timeout
            │                                                      │
            ▼                                                      ▼
Next Task Setup → Device State Reset → Frontend Update   Abort Reason Logging
```

**Detailed Steps**:

1. **Task Initialization**: Game start triggers first task activation

2. **User Progress**: Device interactions checked against task goals

3. **Completion Detection**: Backend validates when task objectives are met

4. **State Transition**: Current task marked complete, next task activated

5. **Device Reset**: Device states updated for new task requirements

6. **Frontend Sync**: Task progress and device states updated in real-time
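Goal checking and the state transition in steps 2 to 4 can be sketched as follows. The `Goal` shape and both function names are hypothetical; the actual goal format is defined by `game.json` and may differ.

```typescript
type DeviceValue = boolean | number;

// Hypothetical goal shape: a device interaction must hold a given value.
interface Goal {
  device: string;
  interaction: string;
  value: DeviceValue;
}

interface TaskProgress {
  taskId: string;
  goals: Goal[];
  isCompleted: boolean;
}

type DeviceStates = Record<string, Record<string, DeviceValue>>;

// Step 2 and 3: a task is done when every goal matches the current state.
function goalsMet(task: TaskProgress, states: DeviceStates): boolean {
  return task.goals.every((goal) => {
    const deviceState = states[goal.device];
    return deviceState !== undefined && deviceState[goal.interaction] === goal.value;
  });
}

// Step 4: mark the active task complete and return the index of the task
// that should be active next. The result equals tasks.length once all
// tasks are done.
function advanceTasks(tasks: TaskProgress[], activeIndex: number, states: DeviceStates): number {
  const active = tasks[activeIndex];
  if (active === undefined || !goalsMet(active, states)) {
    return activeIndex;
  }
  active.isCompleted = true;
  return activeIndex + 1;
}
```

A caller would run `advanceTasks` after each persisted device interaction and, when the index changes, reset device states for the next task.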

### 🚀 Session Lifecycle

```
HTTP: POST /api/create-session → Session + Tasks + Devices Created in MongoDB
        │
        ▼
HTTP: GET /api/game-data → Game Config + Current Device States Merged
        │
        ▼
Frontend: Socket.IO Connection → Real-time Event Handlers Registered
        │
        ▼
Socket: game-start Event → Study Session Begins → Task Timer Starts
        │
        ▼
Real-time Interactions → Device Events → Task Progress → Rule Evaluation
        │
        ▼
HTTP: POST /api/complete-study → Final Data Collection → Session Cleanup
```

**Detailed Steps**:

1. **Session Creation**: API creates session record with associated tasks and initial device states

2. **Configuration Loading**: Frontend requests merged game config with current device states

3. **Socket Connection**: Real-time communication established for interactive gameplay

4. **Study Start**: User begins study, first task activated with timer

5. **Interactive Phase**: Device interactions, explanations, task progression

6. **Study Completion**: Final API call collects completion data and marks session finished

## Architecture Patterns

### 🎯 Event-Driven Communication

* **Socket.IO**: Real-time bidirectional communication between frontend and backend

* **EventsCenter**: Decoupled communication between React and Phaser components

* **Callback Patterns**: Asynchronous explanation engine integration

### ⚙️ Configuration-Driven Design

* **JSON Configuration**: `game.json` and `explanation.json` define study parameters

* **Dynamic State Merging**: Game configuration merged with real-time device states

* **Flexible Rule System**: JSON-defined automated behaviors and device responses

### 🏗️ Modular Service Architecture

* **Specialized Handlers**: Dedicated socket handlers for different event types

* **Shared Services**: Common operations abstracted into reusable services

* **Factory Patterns**: Plugin-style explanation engine selection

### 🔄 State Synchronization

* **Single Source of Truth**: MongoDB serves as authoritative state store

* **Real-time Updates**: Socket.IO ensures frontend reflects backend state changes

* **Session Isolation**: Each user session maintains independent device states

## Security and Performance

### 🔐 Security Measures

* **Session Validation**: All socket events validate active sessions

* **Input Sanitization**: User inputs validated against JSON schemas

* **Connection Management**: Socket IDs tracked for secure communication

### ⚡ Performance Optimizations

* **MongoDB Connection Pooling**: Efficient database connection management

* **Explanation Caching**: Generated explanations cached to avoid regeneration

* **Real-time Optimization**: Socket.IO rooms for efficient event broadcasting

* **Static Asset Caching**: Next.js optimization for game assets and configurations

**Architecture Benefits**

This architecture provides:

* **Scalability**: Modular design supports multiple concurrent research sessions

* **Flexibility**: Configuration-driven approach allows easy study customization

* **Reliability**: Event-driven patterns with comprehensive error handling

* **Research Focus**: Comprehensive logging and data collection for analysis

---

# Configuration

To configure the game, both JSON files `game.json` and `explanation.json` are required in the folder `platform/src/*`.

Together, these files configure the game: tasks, environment, room layout, rules, and the underlying explanation engine. Both files are already present, so you can adapt them to your needs.

For details on each part of the configuration, see the subpages of this section.

---

# Database

## Introduction

Our framework uses a **MongoDB** database with five collections that are created upon session start and during gameplay logging.

| Collection Name | Description |
| ----------------------------- | -------------------------------------------------------------------------------------- |
| [devices](#devices) | Stores user device variables and their current states per session and device |
| [explanations](#explanations) | Stores generated explanations along with user ratings and interaction metadata |
| [logs](#logs) | Stores detailed logs of user interactions with the system during gameplay |
| [sessions](#sessions) | Stores general session information including identifiers, timestamps, and user context |
| [tasks](#tasks) | Stores user progress and performance on gameplay tasks |

## Devices

The 'devices' collection stores device states and properties for each user in the form of JSON objects. Each object corresponds to a particular device in the smart environment for a specific session.

**Fields**

* **\_id**: Internal MongoDB Object ID.

* **userSessionId**: References the session this device belongs to (sessions.sessionId).

* **deviceId**: Unique ID of the device, matches the id field in game.json.

* **deviceInteraction**: Array of objects describing the current interaction state (see [Interacting with Devices](https://exmartlab.github.io/SHiNE-Framework/SHiNE-Framework/game-config/game_schema/interaction_types.md)) of the device.

Each element in deviceInteraction includes:

* name: Name of the interaction variable (e.g., “Power”, “Temperature”)

* type: Interaction type (e.g., "Boolean\_Action")

* value: Current value of the device parameter

**Example**

```
{
  "_id": {
    "$oid": "6890802b170e8ab76cd90c50"
  },
  "userSessionId": "a9297ea7-8f3a-4fcd-abd3-ae3bed267494",
  "deviceId": "deep_fryer",
  "deviceInteraction": [
    {
      "name": "Power",
      "type": "Boolean_Action",
      "value": false
    },
    {
      "name": "Temperature",
      "type": "Numerical_Action",
      "value": 0
    }
  ]
}
```

* `userSessionId`: User Session ID, references `sessions.sessionId`

* `deviceId`: Device ID, matches device `id` in `game.json`

* `deviceInteraction`: Array of JSON objects for each device interaction, containing the interaction name, type, and current value
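As a reading aid, the document shape above can be expressed as TypeScript interfaces with two small helpers. The field names match the example; the value type union and both helper functions are assumptions for illustration, and the MongoDB `_id` field is omitted.

```typescript
// Field names follow the example document; the value union is an assumption.
interface DeviceInteractionState {
  name: string;
  type: string;
  value: boolean | number;
}

interface DeviceDocument {
  userSessionId: string;
  deviceId: string;
  deviceInteraction: DeviceInteractionState[];
}

// Look up the current value of one interaction, or undefined if absent.
function getInteractionValue(doc: DeviceDocument, name: string): boolean | number | undefined {
  const matches = doc.deviceInteraction.filter((entry) => entry.name === name);
  return matches.length > 0 ? matches[0].value : undefined;
}

// Return a copy with one interaction value replaced; the input is untouched.
function withInteractionValue(doc: DeviceDocument, name: string, value: boolean | number): DeviceDocument {
  return {
    ...doc,
    deviceInteraction: doc.deviceInteraction.map((entry) =>
      entry.name === name ? { ...entry, value } : entry
    ),
  };
}

const deepFryer: DeviceDocument = {
  userSessionId: "a9297ea7-8f3a-4fcd-abd3-ae3bed267494",
  deviceId: "deep_fryer",
  deviceInteraction: [
    { name: "Power", type: "Boolean_Action", value: false },
    { name: "Temperature", type: "Numerical_Action", value: 0 },
  ],
};

const switchedOn = withInteractionValue(deepFryer, "Power", true);
```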

## Logs

The logs collection stores all user-generated actions and system events during gameplay. Each entry records a single event along with a timestamp, session reference, and context-specific metadata.

**Fields**

* **\_id**: Internal MongoDB Object ID.

* **type**: Type of the logged event (see [Log Types](#log-types) below).

* **metadata**: Event-specific metadata (e.g., task ID, rule ID, device ID).

* **timestamp**: Unix timestamp of when the event occurred.

* **userSessionId**: References the session in which the event occurred (sessions.sessionId).

**Example**

```
{
  "_id": {
    "$oid": "6890802e170e8ab76cd90c55"
  },
  "type": "TASK_BEGIN",
  "metadata": {
    "task_id": "deep_fryer"
  },
  "timestamp": 1754300462,
  "userSessionId": "a9297ea7-8f3a-4fcd-abd3-ae3bed267494"
}
```

* `type`: Log type, see [Log Types](#log-types) section below

* `metadata`: Metadata for relevant log types, such as task ID

* `timestamp`: Unix timestamp of log creation

* `userSessionId`: User Session ID, references `sessions.sessionId`

### Log Types

Each log entry’s type field corresponds to one of the following predefined event types, handled by the platform’s Logger class.

| Log Type | Description | Metadata Fields |
| ----------------------------------------------- | -------------------------------------------------------------- | -------------------------------------- |
| [RULE\_TRIGGER](#rule_trigger) | Triggered when a smart home automation rule activates | `rule_id`, `rule_action` |
| [TASK\_BEGIN](#task_begin) | User starts a new task | `task_id` |
| [TASK\_COMPLETED](#task_completed) | User successfully completes a task | `task_id` |
| [TASK\_TIMEOUT](#task_timeout) | Task duration exceeded the allowed time limit | `task_id` |
| [ABORT\_TASK](#abort_task) | Task was aborted manually or by the system | `task_id`, `abort_reason` |
| [DEVICE\_INTERACTION](#device_interaction) | User interacts with a device (e.g., toggling, adjusting value) | Device-specific metadata |
| [WALL\_SWITCH](#wall_switch) | User switches view to a different wall within the same room | `room`, `wall` |
| [ENTER\_DEVICE\_CLOSEUP](#enter_device_closeup) | User enters close-up view of a device | `device` |
| [EXIT\_DEVICE\_CLOSEUP](#exit_device_closeup) | User exits close-up view of a device | `device` |
| [ROOM\_SWITCH](#room_switch) | User moves from one room to another | `destination_room`, `destination_wall` |

#### RULE\_TRIGGER

Logged when automated rules are triggered in the smart home environment.

Metadata:

* `rule_id`: ID of the triggered rule

* `rule_action`: Action performed by the rule

#### TASK\_BEGIN

Logged when a user starts a new task.

Metadata:

* `task_id`: ID of the task being started

#### TASK\_COMPLETED

Logged when a user successfully completes a task.

Metadata:

* `task_id`: ID of the completed task

#### TASK\_TIMEOUT

Logged when a task exceeds its time limit.

Metadata:

* `task_id`: ID of the task that timed out

#### ABORT\_TASK

Logged when a user or system aborts a task.

Metadata:

* `task_id`: ID of the aborted task

* `abort_reason`: Reason for task abortion

#### DEVICE\_INTERACTION

Logged when a user interacts with smart home devices.

Metadata:

* Device-specific interaction data (varies by device type and interaction)

#### WALL\_SWITCH

Logged when user switches between walls in a room.

Metadata:

* `room`: Name of the room

* `wall`: Wall identifier being switched to

#### ENTER\_DEVICE\_CLOSEUP

Logged when user enters device closeup/interaction mode.

Metadata:

* `device`: Device identifier being accessed

#### EXIT\_DEVICE\_CLOSEUP

Logged when user exits device closeup mode.

Metadata:

* `device`: Device identifier being exited

#### ROOM\_SWITCH

Logged when user navigates between different rooms via doors.

Metadata:

* `destination_room`: Target room being navigated to

* `destination_wall`: Target wall within the destination room
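The log types and their metadata can be modeled as a discriminated union, which lets a consumer narrow the metadata by `type`. The type and metadata field names are taken from the table above; the field types, `makeLogEntry`, and `describe` are assumptions for illustration and not the platform's Logger class.

```typescript
// Metadata field names come from the log type table; their types are assumed.
type LogEvent =
  | { type: "RULE_TRIGGER"; metadata: { rule_id: string; rule_action: unknown } }
  | { type: "TASK_BEGIN" | "TASK_COMPLETED" | "TASK_TIMEOUT"; metadata: { task_id: string } }
  | { type: "ABORT_TASK"; metadata: { task_id: string; abort_reason: string } }
  | { type: "DEVICE_INTERACTION"; metadata: Record<string, unknown> }
  | { type: "WALL_SWITCH"; metadata: { room: string; wall: string } }
  | { type: "ENTER_DEVICE_CLOSEUP" | "EXIT_DEVICE_CLOSEUP"; metadata: { device: string } }
  | { type: "ROOM_SWITCH"; metadata: { destination_room: string; destination_wall: string } };

type LogEntry = LogEvent & { timestamp: number; userSessionId: string };

// Build a log document; timestamps are Unix seconds, as in the example entry.
function makeLogEntry(event: LogEvent, userSessionId: string, now: Date): LogEntry {
  const stamp = { timestamp: Math.floor(now.getTime() / 1000), userSessionId };
  return { ...event, ...stamp };
}

// One-line summary per entry; the switch narrows metadata by log type.
function describe(entry: LogEntry): string {
  switch (entry.type) {
    case "TASK_BEGIN":
    case "TASK_COMPLETED":
    case "TASK_TIMEOUT":
      return entry.type + " " + entry.metadata.task_id;
    case "ABORT_TASK":
      return "ABORT_TASK " + entry.metadata.task_id + " (" + entry.metadata.abort_reason + ")";
    case "ROOM_SWITCH":
      return "ROOM_SWITCH to " + entry.metadata.destination_room;
    default:
      return entry.type;
  }
}

const taskBegin = makeLogEntry(
  { type: "TASK_BEGIN", metadata: { task_id: "deep_fryer" } },
  "a9297ea7-8f3a-4fcd-abd3-ae3bed267494",
  new Date("2025-08-04T09:41:02Z")
);
```

With these inputs the entry carries the same `timestamp` as the example document, 1754300462, which corresponds to 09:41:02 UTC on 2025-08-04.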

## Sessions

The 'sessions' collection stores general metadata about a user’s study session, including timing, client settings, custom parameters, and socket connections.

**Fields**

* **\_id**: Internal MongoDB Object ID.

* **sessionId**: Unique identifier for the session.

* **startTime**: Timestamp marking when the session began.

* **lastActivity**: Timestamp of the most recent interaction in the session.

* **userAgent**: User’s browser and system information string.

* **screenSize**: Screen dimensions used by the participant.

  * width: Screen width in pixels

  * height: Screen height in pixels

* **isCompleted**: Boolean indicating whether the session was successfully completed.

* **completionTime**: Timestamp of session completion (if applicable).

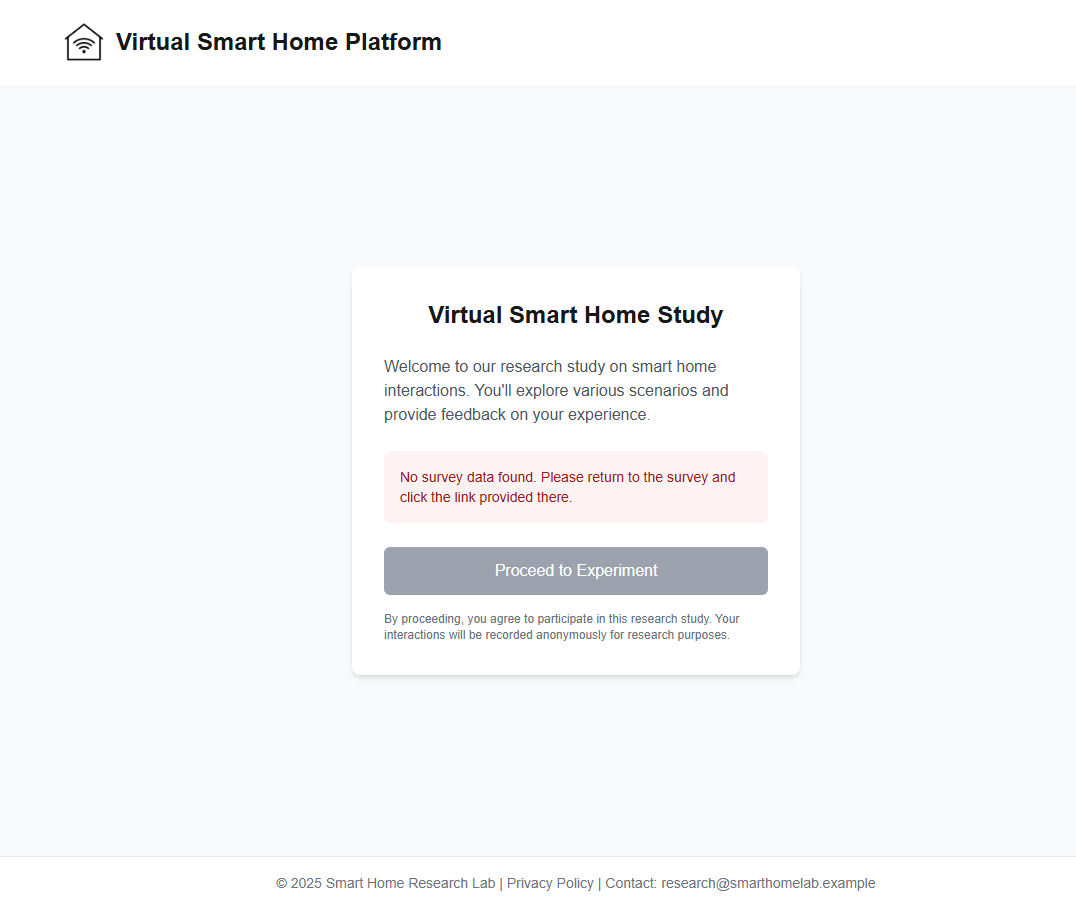

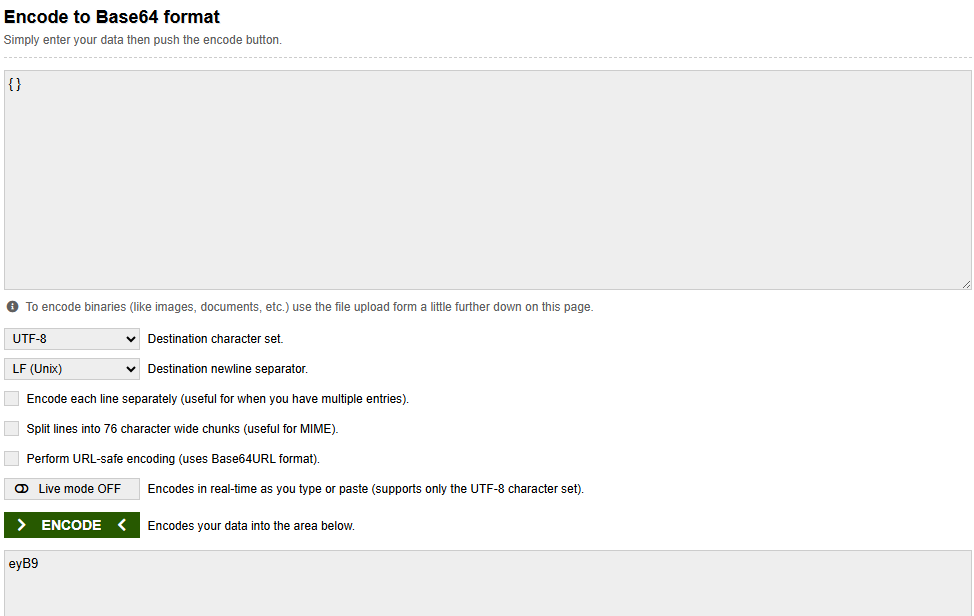

* **customData**: User-specific metadata, passed through the URL as base64-encoded data when launching the study. Includes fields like:

  * Condition: Experimental condition assigned

  * Technical\_Interest: Self-reported interest in technology

  * User\_Name: Name or label of participant (if provided)

* **explanation\_cache**: Cached explanation object, used only in on-demand explanation mode.

* **socketId**: Latest Socket.IO connection ID for real-time communication.

**Example**

```

{

"_id": {

"$oid": "6890802b170e8ab76cd90c4c"

},

"sessionId": "a9297ea7-8f3a-4fcd-abd3-ae3bed267494",

"startTime": {

"$date": "2025-08-04T09:40:59.417Z"

},

"lastActivity": {

"$date": "2025-08-04T09:40:59.417Z"

},

"userAgent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/138.0.0.0 Safari/537.36",

"screenSize": {

"width": 1280,

"height": 665

},

"isCompleted": true,

"completionTime": {

"$date": "2025-08-04T09:44:09.273Z"

},

"customData": {

"Condition": 2,

"Technical_Interest": "interested",

"User_Name": "John"

},

"explanation_cache": null,

"socketId": "ODKC-GRRXqG6JI_CAAAD"

}

```
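The `customData` field above arrives as base64-encoded data in the study URL. The following is a minimal sketch of how such a value could be produced and read back, assuming the payload is a JSON object encoded with standard base64. The host, the `data` parameter name, and the helper names are placeholders for illustration and are not confirmed by this page.

```python
import base64
import json
import urllib.parse


def encode_custom_data(custom_data: dict) -> str:
    """Serialize custom data to JSON, then base64-encode it."""
    raw = json.dumps(custom_data).encode("utf-8")
    return base64.b64encode(raw).decode("ascii")


def decode_custom_data(encoded: str) -> dict:
    """Reverse of encode_custom_data: base64-decode, then parse JSON."""
    return json.loads(base64.b64decode(encoded).decode("utf-8"))


custom_data = {"Condition": 2, "Technical_Interest": "interested", "User_Name": "John"}
token = encode_custom_data(custom_data)

# Hypothetical launch URL: the host and the `data` parameter name are placeholders.
# Standard base64 may contain '+', '/' and '=', so the token is percent-encoded.
study_url = "https://study.example.org/?data=" + urllib.parse.quote(token, safe="")
```

Decoding the token with `decode_custom_data` returns the same object that appears under `customData` in the example document.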

## Tasks[](#tasks "Direct link to Tasks")

The `tasks` collection stores information about each user’s progress on individual tasks in a gameplay session. As with devices, there may be multiple entries per user session, one per task.

**Fields**

* **\_id**: Internal MongoDB Object ID.

* **userSessionId**: References the session during which the task was executed (sessions.sessionId).

* **taskId**: Unique task identifier, matches id field in game.json.

* **task\_order**: Order in which the task appears in the gameplay sequence (starting from 0).

* **taskDescription**: Human-readable task prompt shown to the user.

* **isCompleted**: true if the user completed the task successfully.

* **isAborted**: true if the task was aborted (e.g., by user or system).

* **isTimedOut**: true if the task exceeded its time limit.

* **completionTime**: Timestamp of successful completion (if any).

* **abortedReason**: Explanation for abortion (if any).

* **startTime**: Timestamp of when the task began.

* **endTime**: Timestamp of when the task ended (regardless of outcome).

* **interactionTimes**: Number of user interactions during the task.

* **duration**: Time taken to complete or exit the task, in seconds.

**Example**

```

{

"_id": {

"$oid": "6890802b170e8ab76cd90c4d"

},

"userSessionId": "a9297ea7-8f3a-4fcd-abd3-ae3bed267494",

"taskId": "deep_fryer",

"task_order": 0,

"taskDescription": "Turn on the deep fryer",

"isCompleted": false,

"isAborted": false,

"isTimedOut": true,

"completionTime": null,

"abortedReason": null,

"startTime": {

"$date": "2025-08-04T09:40:59.420Z"

},

"endTime": {

"$date": "2025-08-04T09:43:00.414Z"

},

"interactionTimes": 5,

"duration": 120.994

}

```
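In the example above, `duration` matches the gap between `startTime` and `endTime`. The sketch below recomputes it from an exported task document and derives a single outcome label from the three status flags. It assumes that `duration` is always `endTime - startTime`, which the example values are consistent with but which this page does not state outright. The helper names and the `in_progress` label are hypothetical.

```python
from datetime import datetime


def parse_mongo_date(value: dict) -> datetime:
    """Parse an extended-JSON date such as {"$date": "2025-08-04T09:40:59.420Z"}."""
    return datetime.fromisoformat(value["$date"].replace("Z", "+00:00"))


def task_duration_seconds(task: dict) -> float:
    """Seconds between startTime and endTime of a task document."""
    start = parse_mongo_date(task["startTime"])
    end = parse_mongo_date(task["endTime"])
    return (end - start).total_seconds()


def task_outcome(task: dict) -> str:
    """Collapse the three boolean flags into one label."""
    if task["isCompleted"]:
        return "completed"
    if task["isAborted"]:
        return "aborted"
    if task["isTimedOut"]:
        return "timed_out"
    return "in_progress"  # hypothetical label for a task with no outcome yet


task = {
    "taskId": "deep_fryer",
    "isCompleted": False,
    "isAborted": False,
    "isTimedOut": True,
    "startTime": {"$date": "2025-08-04T09:40:59.420Z"},
    "endTime": {"$date": "2025-08-04T09:43:00.414Z"},
}

duration = task_duration_seconds(task)  # about 120.994 seconds
outcome = task_outcome(task)            # "timed_out"
```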

## Explanations[](#explanations "Direct link to Explanations")

The `explanations` collection stores all explanations presented to users during gameplay. Each entry includes the explanation content, timing, session context, task context, and user feedback (if provided).

**Fields**

* **\_id**: Internal MongoDB Object ID.

* **explanation\_id**: UUID uniquely identifying the explanation event.

* **explanation**: Text content of the explanation shown to the user.

* **created\_at**: Timestamp of when the explanation was generated or displayed.

* **userSessionId**: References the session during which the explanation was triggered (sessions.sessionId).

* **taskId**: ID of the task associated with the explanation (if applicable).

* **delay**: Delay (in seconds) between triggering and showing the explanation, defined by the associated rule.

* **rating**: Object containing user feedback:

  * is\_liked: Boolean indicating whether the user liked the explanation. This field is added only after the user interacts with the feedback UI.

**Example**

```

{

"_id": {

"$oid": "68a441d567cee9ab1e3d0f8d"

},

"explanation_id": "13a674b5-b762-4650-8101-2fbe7e5d08cd",

"explanation": "Since the cooker hood is not turned on, the deep fryer cannot be turned on.",

"created_at": {

"$date": "2025-08-19T09:20:21.854Z"

},

"userSessionId": "06711ce4-a2af-4e62-9026-17231df6a985",

"taskId": "deep_fryer",

"delay": 0,

"rating": {

"is_liked": true

}

}

```

---

# Explanation Engine

## Introduction[](#inroduction "Direct link to Introduction")

Understanding how intelligent systems make decisions is critical in building trustworthy, usable, and transparent smart environments. In recent years, this has led to growing interest in **explainable systems**, systems that not only act autonomously but also provide human-understandable **reasons** for their behavior.

In smart home environments, users often interact with complex automation rules, adaptive device behaviors, and sensor-triggered events. Without explanations, such interactions can lead to confusion, misunderstanding, or loss of control.

To address this, **explanations** serve as a bridge between **system logic** and **human mental models**. They help users answer questions like:

* *Why did the lamp turn off automatically?*

* *Why can’t I use the oven right now?*

* *What caused the heating to activate?*

In this scope, the **Explanation Engine** is the component that generates contextual explanations and feedback based on participants’ interactions with *devices* and *automation rules* within the smart home environment. These explanations are designed to enhance system transparency, improve usability, and support user understanding during task execution.

Our framework enables seamless integration and simulation of the Explanation Engine within a **virtual smart environment**, making it possible to study a wide range of aspects related to **explanation** and **explainability in intelligent systems**.

By using this setup, researchers can conduct both **quantitative** and **qualitative analyses** to assess:

* The **effectiveness** of explanations in supporting user performance and comprehension,

* The **differences between various explanation types**, such as:

  * *Causal explanations* (why something happened),

  * *Counterfactual explanations* (what would have happened if…),

  * *Contrastive explanations* (why this instead of that),

* The impact of different **explanation provision strategies**, such as:

  * *Proactive/automated* explanations (delivered by the system automatically),

  * *On-demand/user-triggered* explanations (delivered upon request).

This flexibility supports fine-grained experimental design and controlled studies on **Explainable Smart Environments** and **Computer-Human Interaction**.

**Integration Options**

You can integrate the Explanation Engine in one of two ways:

1. **Integrated Engine (Default)**

Use the built-in explanation logic that is tightly coupled with the simulation environment. This engine automatically monitors interactions and provides the pre-defined explanations based on the defined rules and states.

2. **Custom API Endpoint**

Connect the system to an external or custom explanation API. This approach is ideal if you want to use your own backend logic, machine learning model, or dynamic explanation strategy.

* The system sends relevant data (e.g., device states, rule matches, user actions) to your API.

* The API returns an explanation string or object to be rendered in the UI.

## JSON Schema[](#json-schema "Direct link to JSON Schema")

The configuration of the **Explanation Engine** is managed through a separate file, `explanation.json`, and is intended to provide modular control over how and when explanations are issued, and which explanation system is activated.


### Explanation Engine Schema

```

{

"$schema": "http://json-schema.org/draft-07/schema#",

"title": "Explanation Engine Configuration",

"description": "Configuration schema for the explanation engine system",

"type": "object",

"properties": {

"explanation_trigger": {

"type": "string",

"enum": [

"pull",

"push",

"interactive"

],

"description": "Defines when explanations are triggered. 'pull' caches explanations until user requests via button click. 'push' shows explanations immediately when conditions are met. 'interactive' enables immediate explanations plus user message input for external explanation engine."

},

"explanation_engine": {

"type": "string",

"enum": [

"integrated",

"external"

],

"description": "Type of explanation engine to use. 'integrated' for simple rule-based explanations, 'external' for complex custom logic."

},

"external_explanation_engine": {

"type": "object",

"description": "Configuration for external explanation engine. Contains engine type and API endpoint.",

"properties": {

"external_engine_type": {

"type": "string",

"enum": [

"rest",

"ws"

],

"description": "Communication protocol for external explanation engine. 'rest' for REST API, 'ws' for WebSocket."

},

"external_explanation_engine_api": {

"type": "string",

"format": "uri",

"description": "URL of the external explanation engine API endpoint (without trailing slash)."

}

},

"required": [

"external_engine_type",

"external_explanation_engine_api"

],

"additionalProperties": false

},

"integrated_explanation_engine": {

"type": "object",

"description": "Collection of explanation rules for the integrated engine. Key is the explanation ID, value is the explanation text (can include HTML).",

"patternProperties": {

"^[a-zA-Z0-9_]+$": {

"type": "string",

"description": "Explanation text that can include HTML tags for formatting"

}

},

"additionalProperties": false

},

"explanation_rating": {

"type": "string",

"enum": [

"like"

],

"description": "Enable rating system for explanations. Currently only 'like' (thumbs up/down) is supported."

}

},

"required": [

"explanation_trigger",

"explanation_engine"

],

"allOf": [

{

"if": {

"properties": {

"explanation_engine": {

"const": "external"

}

}

},

"then": {

"required": [

"external_explanation_engine"

]

}

},

{

"if": {

"properties": {

"explanation_engine": {

"const": "integrated"

}

}

},

"then": {

"required": [

"integrated_explanation_engine"

]

}

}

],

"additionalProperties": false

}

```

| Property                        | Type     | Description                                                                                                                  |
| ------------------------------- | -------- | ---------------------------------------------------------------------------------------------------------------------------- |
| `explanation_trigger`           | `string` | Determines when explanations are shown. Options: `"pull"`, `"push"`, or `"interactive"`.                                     |
| `explanation_engine`            | `string` | Selects the explanation system. Options: `"integrated"` or `"external"`.                                                     |
| `explanation_rating`            | `string` | Specifies how users rate explanations. Options: `"like"`.                                                                    |
| `integrated_explanation_engine` | `object` | Required if `explanation_engine` is `"integrated"`; maps explanation IDs to static explanation strings.                      |
| `external_explanation_engine`   | `object` | Required if `explanation_engine` is `"external"`; contains `external_engine_type` and `external_explanation_engine_api`.     |

**Complete Configuration Example**

```

{

"explanation_trigger": "push",

"explanation_engine": "integrated",

"explanation_rating": "like",

"integrated_explanation_engine": {

"deep_fryer_01": "The deep fryer automatically turns off when the cooker hood is not running. This is a safety feature to prevent smoke buildup.",

"mixer_01": "The mixer is automatically disabled after 22:00 to reduce noise for neighbors.",

"lamp_01": "The lamp automatically turns on when you open a book to provide adequate reading light."

}

}

```
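The constraints in the schema above can be checked before a study is launched. The following is a minimal hand-written sketch that mirrors the schema's main rules (allowed enum values, the conditional requirement on the engine-specific object, and the key pattern for explanation IDs). It is not the platform's own validator, the function name is hypothetical, and it does not cover every schema keyword (for example `additionalProperties` or the `uri` format).

```python
import re
from typing import List

TRIGGERS = {"pull", "push", "interactive"}
ENGINES = {"integrated", "external"}
EXTERNAL_TYPES = {"rest", "ws"}
ID_PATTERN = re.compile(r"[a-zA-Z0-9_]+")


def validate_explanation_config(config: dict) -> List[str]:
    """Return a list of human-readable problems; an empty list means no problem found."""
    errors = []
    if config.get("explanation_trigger") not in TRIGGERS:
        errors.append("explanation_trigger must be one of pull, push, interactive")
    engine = config.get("explanation_engine")
    if engine not in ENGINES:
        errors.append("explanation_engine must be integrated or external")
    if "explanation_rating" in config and config["explanation_rating"] != "like":
        errors.append("explanation_rating only supports 'like'")

    if engine == "integrated":
        mapping = config.get("integrated_explanation_engine")
        if not isinstance(mapping, dict):
            errors.append("integrated_explanation_engine is required for the integrated engine")
        else:
            for key, text in mapping.items():
                if not ID_PATTERN.fullmatch(key):
                    errors.append(f"invalid explanation ID: {key!r}")
                if not isinstance(text, str):
                    errors.append(f"explanation {key!r} must be a string")

    if engine == "external":
        ext = config.get("external_explanation_engine")
        if not isinstance(ext, dict):
            errors.append("external_explanation_engine is required for the external engine")
        else:
            if ext.get("external_engine_type") not in EXTERNAL_TYPES:
                errors.append("external_engine_type must be rest or ws")
            if not isinstance(ext.get("external_explanation_engine_api"), str):
                errors.append("external_explanation_engine_api must be a URL string")
    return errors


example = {
    "explanation_trigger": "push",
    "explanation_engine": "integrated",
    "explanation_rating": "like",
    "integrated_explanation_engine": {
        "mixer_01": "The mixer is automatically disabled after 22:00 to reduce noise for neighbors."
    },
}
problems = validate_explanation_config(example)  # expected to be empty
```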

### Property: Explanation Trigger[](#property-explanation-trigger "Direct link to Property: Explanation Trigger")

Controls when and how explanations are delivered to participants:

* **`pull`**: Explanations are cached when rule conditions trigger, but are shown only when the user clicks the "Explain Me!" button. This lets participants discover contradictions on their own before seeking explanations.

* **`push`**: Explanations are shown immediately when rule conditions are met or when the external explanation engine emits an explanation.

* **`interactive`**: Explanations are shown immediately, as in `push` mode, and a chat input lets users send custom messages to the external explanation engine for interactive explanations.

**Choosing a Trigger Type**

* Use **`pull`** when you want participants to discover issues independently before seeking help.

* Use **`push`** for educational scenarios where immediate feedback is beneficial.

* Use **`interactive`** when you want immediate explanations plus the ability for users to ask follow-up questions via chat.
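The difference between the trigger modes comes down to what happens at the moment an explanation is generated. The sketch below models that decision with a tiny in-memory session object. The class and function names are hypothetical, and clearing the cache after the explanation has been shown is an assumption rather than documented behavior.

```python
from typing import List, Optional


class SessionState:
    """Stand-in for a session: one cached explanation plus what the user has seen."""

    def __init__(self) -> None:
        self.explanation_cache: Optional[str] = None
        self.shown: List[str] = []


def on_explanation_generated(session: SessionState, trigger: str, text: str) -> None:
    """Called when a rule (or an external engine) produces an explanation."""
    if trigger == "pull":
        # Hold the explanation until the participant asks for it.
        session.explanation_cache = text
    elif trigger in ("push", "interactive"):
        # Show it right away; interactive additionally offers a chat input.
        session.shown.append(text)
    else:
        raise ValueError(f"unknown explanation_trigger: {trigger}")


def on_explain_button_clicked(session: SessionState) -> Optional[str]:
    """Called when the participant clicks "Explain Me!" in pull mode."""
    text = session.explanation_cache
    if text is not None:
        session.shown.append(text)
        session.explanation_cache = None  # assumption: cache is cleared once shown
    return text


session = SessionState()
on_explanation_generated(session, "pull", "The deep fryer requires the cooker hood.")
# Nothing is visible yet; the explanation appears only after the button click.
on_explain_button_clicked(session)
```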

### Property: Explanation Engine[](#property-explanation-engine "Direct link to Property: Explanation Engine")

Specifies **which explanation system** is used to generate and deliver explanations:

* **`integrated`**: Uses the built-in explanation engine defined directly within the simulation. This mode relies on predefined mappings between explanation IDs and explanation strings. It’s simple, fast, and fully contained within the simulation environment, ideal for controlled experiments where consistency and low complexity are desired.

* **`external`**: Sends explanation queries to an **external API endpoint**. This allows for integration of custom explanation logic, dynamic generation (e.g., with LLMs), or connection to logging systems. The API should return a structured explanation object.

When using `external`, you must also specify the communication protocol within the `external_explanation_engine` object:

```

{

"explanation_engine": "external",

"external_explanation_engine": {

"external_engine_type": "rest",

"external_explanation_engine_api": "https://your-api.com/explanation"

}

}

```

**Choosing an Engine Type**

Use **integrated** for straightforward, repeatable studies or offline deployments. Use **external** when your study requires flexible, adaptive, or user-personalized explanations powered by external models or logic.

### Property: Explanation Rating[](#property-explanation-rating "Direct link to Property: Explanation Rating")

Controls whether participants can rate the **usefulness** of the explanations they receive.

```

{

"explanation_rating": "like"

}

```

**Supported Values**:

* **like**: Enables a simple thumbs up/down feedback mechanism after each explanation is shown. It helps researchers:

  * Understand how users perceive the relevance and helpfulness of the explanations.

  * Identify which explanations are working and which need refinement.

> Additional rating modes (e.g., 5-star scale, open comments) may be supported in future versions. For now, `"like"` is the only supported value; omit the property to disable rating.

## Integrated Explanation Engine[](#integrated-explanation-engine "Direct link to Integrated Explanation Engine")

The integrated explanation engine provides a straightforward approach for rule-based explanations without requiring external infrastructure.

### Creating Explanations[](#creating-explanations "Direct link to Creating Explanations")

Define explanations as key-value pairs where:

* **Key**: Explanation ID referenced in rule actions

* **Value**: Explanation text (supports HTML formatting)

```

{

"integrated_explanation_engine": {

"coffee_machine_01": "The coffee machine turns off automatically after making 5 cups to prevent overheating.",

"security_system_01": "The security system requires all doors to be locked before activation.",

"thermostat_01": "Energy saving mode automatically reduces temperature during unoccupied hours."

}

}

```

### Integration with Rules[](#integration-with-rules "Direct link to Integration with Rules")

Explanation generation can be explicitly bound to rule execution. That is, generating an explanation becomes a deliberate action in the system’s rule set, just like turning off a device or adjusting a temperature.

This design allows researchers to precisely specify:

* When and under which conditions an explanation is generated

* Which explanation text is associated with the event

To trigger an explanation when a rule is executed, include an *Explanation action* within the rule’s action block. This action references an explanation ID previously defined in `explanation.json`.

This approach allows fine-grained control over when explanations are generated based on system logic and environmental context.

This mechanism controls when the explanation is generated and queued, not when it is shown to the participant.

Showing the explanation is governed separately by the explanation\_trigger setting (e.g., pull, push, or interactive).

```

{

"name": "Coffee Machine Auto-Off",

"precondition": [

{

"type": "Device",

"device": "coffee_machine",

"condition": {

"name": "Cups Made",

"operator": ">=",

"value": 5

}

}

],

"action": [

{

"type": "Device_Interaction",

"device": "coffee_machine",

"interaction": {

"name": "Power",

"value": false

}

},

{

"type": "Explanation",

"explanation": "coffee_machine_01"

}

]

}

```

This rule switches the coffee machine off once 5 cups have been made and generates the explanation with the ID "coffee\_machine\_01" (defined in the example under Creating Explanations) to explain the action. For a full description of rule structure, preconditions, and supported action types, see [Rules.mdx](https://exmartlab.github.io/SHiNE-Framework/SHiNE-Framework/game-config/game_schema/rules.md).
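To make the flow concrete, here is a minimal sketch of how a rule like the one above could be evaluated against the current device state. It is an illustration, not the platform's rule engine: the function names are hypothetical, only `Device` preconditions are handled, all preconditions are assumed to be combined with AND, and every operator other than `>=` is an assumption because this page shows only that one.

```python
import operator

# Only ">=" appears in the example rule; the other operators are assumptions.
OPERATORS = {
    ">=": operator.ge,
    "<=": operator.le,
    ">": operator.gt,
    "<": operator.lt,
    "==": operator.eq,
    "!=": operator.ne,
}


def precondition_holds(pre: dict, device_state: dict) -> bool:
    """Check one Device precondition against state like {"coffee_machine": {"Cups Made": 5}}."""
    if pre["type"] != "Device":
        raise NotImplementedError("only Device preconditions are sketched here")
    cond = pre["condition"]
    current = device_state[pre["device"]][cond["name"]]
    return OPERATORS[cond["operator"]](current, cond["value"])


def apply_rule(rule: dict, device_state: dict, explanations: dict) -> list:
    """Run the rule's actions if every precondition holds; return generated explanation texts."""
    if not all(precondition_holds(p, device_state) for p in rule["precondition"]):
        return []
    generated = []
    for action in rule["action"]:
        if action["type"] == "Device_Interaction":
            interaction = action["interaction"]
            device_state[action["device"]][interaction["name"]] = interaction["value"]
        elif action["type"] == "Explanation":
            # Look up the text by the explanation ID defined in explanation.json.
            generated.append(explanations[action["explanation"]])
    return generated


rule = {
    "name": "Coffee Machine Auto-Off",
    "precondition": [
        {"type": "Device", "device": "coffee_machine",
         "condition": {"name": "Cups Made", "operator": ">=", "value": 5}}
    ],
    "action": [
        {"type": "Device_Interaction", "device": "coffee_machine",
         "interaction": {"name": "Power", "value": False}},
        {"type": "Explanation", "explanation": "coffee_machine_01"},
    ],
}
explanations = {
    "coffee_machine_01": "The coffee machine turns off automatically after making 5 cups to prevent overheating."
}
state = {"coffee_machine": {"Power": True, "Cups Made": 5}}
generated = apply_rule(rule, state, explanations)
```

Whether a generated explanation is displayed at once or cached still depends on `explanation_trigger`.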

## External Explanation Engine[](#external-explanation-engine "Direct link to External Explanation Engine")

An **external explanation engine** allows researchers to implement customized, intelligent, or adaptive explanation strategies that go beyond static rule-based logic. This can include:

* Domain-specific reasoning algorithms

* Machine learning or LLM-based explanation generation

* Integration with external systems, datasets, or user profiles

In this setup, the **explanation generation logic is external** to our framework. The internal mechanics of how explanations are created (e.g., inference models, prompt engineering, heuristics) are not managed or constrained by the framework.

Instead, our framework functions as a middleware that:

1. **Collects runtime context** during the simulation or task execution, including:

   * User identity or role (if available)

   * User interactions (e.g., device toggles, movement, button clicks)

   * Game state and environmental context (e.g., current time, temperature, weather)

2. **Sends this context** as a structured request to a configured external API endpoint

3. **Receives the generated explanation** from the external service

4. **Displays the explanation** in the GUI according to the explanation\_trigger setting

The `external_explanation_engine` object is **required** when the explanation\_engine is set to "external". It contains the configuration for the external explanation engine, including the communication protocol and API endpoint.

**Supported Types**

* **rest**

Communicates with the external explanation engine via **HTTP REST API**. Typically uses endpoints such as:

* POST /logger — to send context logs (optional)

* POST /explanation — to request an explanation based on current context

```

{

"external_explanation_engine": {

"external_engine_type": "rest",

"external_explanation_engine_api": "https://example.com/engine"

}

}

```

* **ws**

Connects via **WebSocket** for real-time, bidirectional communication. Suitable for use cases requiring ongoing dialogue or rapid system-user interaction.

```

{

"external_explanation_engine": {

"external_engine_type": "ws",

"external_explanation_engine_api": "ws://example.com:8080"

}

}

```

### REST API Implementation[](#rest-api-implementation "Direct link to REST API Implementation")

#### Setup Configuration Example[](#setup-configuration-example "Direct link to Setup Configuration Example")

```

{

"explanation_trigger": "push",

"explanation_engine": "external",

"external_explanation_engine": {

"external_engine_type": "rest",

"external_explanation_engine_api": "https://your-domain.com/engine"

},

"explanation_rating": "like"

}

```

> 💡 You can change the explanation\_trigger between "pull", "push", or "interactive" based on your study needs.

#### Required API Endpoints[](#required-api-endpoints "Direct link to Required API Endpoints")

When using a REST-based external explanation engine ("external\_engine\_type": "rest"), your API **must implement the following two endpoints**:

| Endpoint                               | Method | Purpose                                       | Required? |
| -------------------------------------- | ------ | --------------------------------------------- | --------- |
| 1- [`/logger`](#post-logger)           | POST   | Send context and logging data                 | Yes       |
| 2- [`/explanation`](#post-explanation) | POST   | Request explanation (with or without message) | Yes       |

##### POST `/logger`[](#post-logger "Direct link to post-logger")

This endpoint is called **whenever a participant interacts** with the environment or when relevant system events occur. It provides the external engine with rich **contextual information** about the participant's current state, environment, and activity history.

**Environment Data Sources**

The `environment` array contains data from two sources:

* **Task Environment Variables**: Context defined in the current task's `environment` field (e.g., Weather, Temperature)

* **User Custom Data**: Session variables passed via URL context (e.g., user group, study condition, user type)

For more details on session context and user variables, see [Session Context & User Variables](https://exmartlab.github.io/SHiNE-Framework/SHiNE-Framework/getting-started.md#session-context--user-variables).

**Request Payload Example:**

```

{

"user_id": "64bdb062-cb25-487f-8373-c56ac18fba5a",

"current_task": "make_coffee",

"ingame_time": "08:32",

"environment": [

{ "name": "Weather", "value": "Sunny" },

{ "name": "Temperature", "value": 20 },

{ "name": "group", "value": "control" },

{ "name": "user_type", "value": "novice" }

],

"devices": [

{

"device": "coffee_machine",

"interactions": [

{

"name": "Power",

"value": true

},

{

"name": "Cups Made",

"value": 3

}

]

}

],

"logs": [

{

"type": "DEVICE_INTERACTION",

"device_id": "coffee_machine",

"interaction": {

"name": "Power",

"value": true

},

"timestamp": 1739280520

}

]

}

```

> This endpoint is **passive** and does not return an explanation — it exists to keep the external engine context-aware and updated.

##### POST `/explanation`[](#post-explanation "Direct link to post-explanation")

This endpoint is called **when an explanation is requested**, either **on pull** (e.g., user clicks "Explain Me!") or **on push/interactive** (based on rule triggers and system configuration).

**Standard Request:**

```

{

"user_id": "64bdb062-cb25-487f-8373-c56ac18fba5a"

}

```

**Request with User Message (if explanation\_trigger is "interactive"):**

```

{

"user_id": "64bdb062-cb25-487f-8373-c56ac18fba5a",

"user_message": "Why did the coffee machine suddenly turn off?"

}

```

#### API Response Format[](#api-response-format "Direct link to API Response Format")

The API must respond with a structured JSON object:

**Show Explanation:**

```

{

"success": true,

"show_explanation": true,

"explanation": "The coffee machine automatically turns off after making 5 cups to prevent overheating and ensure optimal coffee quality."

}

```

**No Explanation:**

```

{

"success": true,

"show_explanation": false

}

```
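Putting the two endpoints and the response format together, a REST engine can be quite small. The following sketch uses only the Python standard library and keeps the latest `/logger` payload per user in memory. Several details are assumptions: the response body of `/logger` (the page only says it does not return an explanation), routing by the last path segment so that a prefix such as `/engine` works, and the toy "5 cups" logic, which simply reuses the coffee machine example.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

CONTEXT = {}  # latest /logger payload per user_id (in-memory, for illustration only)


def handle_logger(payload: dict) -> dict:
    """Store the newest context for this user; no explanation is returned."""
    CONTEXT[payload["user_id"]] = payload
    return {"success": True}  # assumption: the response body is not specified


def handle_explanation(payload: dict) -> dict:
    """Decide whether to show an explanation, based on the stored context."""
    context = CONTEXT.get(payload["user_id"])
    if context is not None:
        for device in context.get("devices", []):
            values = {i["name"]: i["value"] for i in device["interactions"]}
            if device["device"] == "coffee_machine" and values.get("Cups Made", 0) >= 5:
                return {
                    "success": True,
                    "show_explanation": True,
                    "explanation": "The coffee machine turns off after making 5 cups.",
                }
    return {"success": True, "show_explanation": False}


ROUTES = {"logger": handle_logger, "explanation": handle_explanation}


class EngineHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        # Route on the last path segment so /engine/logger and /logger both work.
        route = ROUTES.get(self.path.rstrip("/").rsplit("/", 1)[-1])
        if route is None:
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps(route(payload)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(port: int = 8000) -> None:
    """Start the server; call run() from your own entry point."""
    HTTPServer(("", port), EngineHandler).serve_forever()
```

A real engine would replace the body of `handle_explanation` with its own reasoning and would likely persist context outside process memory.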

### WebSocket Implementation[](#websocket-implementation "Direct link to WebSocket Implementation")

If you set "external\_engine\_type": "ws", the framework will open a **WebSocket connection** to the configured server and communicate using **event-based messages**. This allows for **real-time explanation exchange**, useful for interactive or dialog-based explainable systems.

#### Setup Configuration Example[](#setup-configuration-example-1 "Direct link to Setup Configuration Example")

```

{
  "explanation_trigger": "pull",
  "explanation_engine": "external",
  "external_explanation_engine": {
    "external_engine_type": "ws",
    "external_explanation_engine_api": "ws://your-domain.com:8080"
  }
}

```

#### WebSocket Events[](#websocket-events "Direct link to WebSocket Events")

All communication is in **JSON format**, sent over the open WebSocket channel.

##### WebSocket Event Summary[](#websocket-event-summary "Direct link to WebSocket Event Summary")

| Event Name                                               | Direction | Trigger                                                     | Purpose                                                         |
| -------------------------------------------------------- | --------- | ----------------------------------------------------------- | --------------------------------------------------------------- |
| [`user_log`](#outgoing-user_log)                         | Outgoing  | On participant action or system event                       | Sends runtime context (user, device, environment) to the engine |
| [`explanation_request`](#outgoing-explanation_request)   | Outgoing  | When user clicks "Explain Me!" or rule triggers explanation | Requests an explanation from the external engine                |
| [`explanation_receival`](#incoming-explanation_receival) | Incoming  | On response from external engine                            | Receives and displays explanation text in the simulation GUI    |

##### Outgoing: `user_log`[](#outgoing-user_log "Direct link to outgoing-user_log")

Sent **whenever participant actions generate logs**, such as device interactions.

```

{

"user_id": "64bdb062-cb25-487f-8373-c56ac18fba5a",

"current_task": "make_coffee",

"ingame_time": "08:32",

"environment": [

{ "name": "Weather", "value": "Sunny" },

{ "name": "group", "value": "control" }

],

"devices": [

{

"device": "coffee_machine",

"interactions": [

{

"name": "Power",

"value": true

}

]

}

],

"logs": {

"type": "DEVICE_INTERACTION",

"device_id": "coffee_machine",

"interaction": {

"name": "Power",

"value": true

},

"timestamp": 1739280520

}

}

```

##### Outgoing: `explanation_request`[](#outgoing-explanation_request "Direct link to outgoing-explanation_request")

Sent when an explanation is requested, either because the participant asks for one (for example, by clicking "Explain Me!") or because a rule triggers it.

```

{

"user_id": "64bdb062-cb25-487f-8373-c56ac18fba5a",

"timestamp": 1739792380,

"user_message": "Why can't I turn on the deep fryer?"

}

```

##### Incoming: `explanation_receival`[](#incoming-explanation_receival "Direct link to incoming-explanation_receival")

The explanation engine responds with an explanation\_receival message. This is then shown in the user interface.

```

{
  "user_id": "64bdb062-cb25-487f-8373-c56ac18fba5a",
  "explanation": "The deep fryer requires the cooker hood to be active for safety ventilation."
}

```

> * **explanation**: The explanation text to display.
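
On the engine side, the three events can be handled by a single dispatch function that is independent of the WebSocket library in use. The sketch below is transport-agnostic on purpose, because this page does not specify how event names are framed on the wire. The function name, the returned `(event, payload)` tuple, and the placeholder explanation texts are all hypothetical.

```python
from typing import Optional, Tuple


def handle_event(event: str, payload: dict, contexts: dict) -> Optional[Tuple[str, dict]]:
    """Process one incoming event; return (event_name, payload) to send back, or None."""
    if event == "user_log":
        # Keep the latest runtime context for this user; no reply is needed.
        contexts[payload["user_id"]] = payload
        return None

    if event == "explanation_request":
        user_id = payload["user_id"]
        context = contexts.get(user_id)
        if context is None:
            text = "No activity has been recorded for this session yet."
        else:
            task = context.get("current_task", "unknown")
            # Placeholder text: a real engine would reason over the stored context
            # and over payload.get("user_message") when it is present.
            text = f"Placeholder explanation for task '{task}'."
        return "explanation_receival", {"user_id": user_id, "explanation": text}

    return None  # ignore events this sketch does not know about


contexts = {}
handle_event("user_log", {"user_id": "u1", "current_task": "make_coffee"}, contexts)
reply = handle_event("explanation_request", {"user_id": "u1", "timestamp": 1739792380}, contexts)
```

The WebSocket server loop would call `handle_event` for each received message and emit the returned event, if any, on the same connection.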

## Log Types Reference[](#log-types-reference "Direct link to Log Types Reference")

The **Explanation Engine** receives detailed logs about participant interactions throughout the smart home simulation. These logs help external or integrated engines **reason about user behavior**, **device states**, and **rule activations** to generate relevant explanations.

Understanding these log types is essential when designing:

* Context-aware explanation systems

* User modeling algorithms

* Task performance analysis

### Overview of Log Types[](#overview-of-log-types "Direct link to Overview of Log Types")

| Log Type                                        | Trigger Event                           | Purpose                                               |
| ----------------------------------------------- | --------------------------------------- | ----------------------------------------------------- |
| [`DEVICE_INTERACTION`](#device_interaction)     | User interacts with a device            | Tracks changes made by the participant                |
| [`RULE_TRIGGER`](#rule_trigger)                 | Automation rule is triggered            | Captures rule activations and their resulting actions |
| [`TASK_BEGIN`](#task_begin)                     | New task starts                         | Marks the start of a task session                     |
| [`TASK_COMPLETED`](#task_completed)             | Task successfully completed             | Marks the end of a successful task                    |
| [`TASK_TIMEOUT`](#task_timeout)                 | Task ends due to time expiration        | Captures task failure from timeout                    |
| [`ROOM_SWITCH`](#room_switch)                   | User moves to another room              | Captures spatial navigation across rooms              |
| [`WALL_SWITCH`](#wall_switch)                   | User looks at another wall in same room | Captures intra-room navigation                        |
| [`ENTER_DEVICE_CLOSEUP`](#enter_device_closeup) | User enters device close-up view        | Tracks focus and engagement with a device             |
| [`EXIT_DEVICE_CLOSEUP`](#exit_device_closeup)   | User exits close-up view                | Returns to wall view from close-up                    |
| [`ABORT_TASK`](#abort_task)                     | User explicitly gives up on a task      | Captures task abandonment with optional reasoning     |

### DEVICE\_INTERACTION[](#device_interaction "Direct link to DEVICE_INTERACTION")

Generated when participants change device settings:

```

{

"type": "DEVICE_INTERACTION",

"metadata": {

"device_id": "deep_fryer",

"interaction": {

"name": "Power",

"value": true

}

},

"timestamp": 1748860205

}

```

### RULE\_TRIGGER[](#rule_trigger "Direct link to RULE_TRIGGER")

Generated when smart home rules activate:

```

{

"type": "RULE_TRIGGER",

"metadata": {

"rule_id": "deep_fryer_rule",

"rule_action": [

{

"device": "deep_fryer",

"property": {

"name": "Power",

"value": false

}

}

]

},

"timestamp": 1748860205

}

```

### TASK\_BEGIN[](#task_begin "Direct link to TASK_BEGIN")

Generated when a new task starts:

```

{

"type": "TASK_BEGIN",

"metadata": {

"task_id": "make_coffee"

},

"timestamp": 1748860190

}

```

### TASK\_COMPLETED[](#task_completed "Direct link to TASK_COMPLETED")

Generated when participants successfully complete all task goals:

```

{

"type": "TASK_COMPLETED",

"metadata": {

"task_id": "make_coffee"

},

"timestamp": 1748862020

}

```

### TASK\_TIMEOUT[](#task_timeout "Direct link to TASK_TIMEOUT")

Generated when a task expires due to time limit before completion:

```

{

"type": "TASK_TIMEOUT",

"metadata": {

"task_id": "make_coffee"

},

"timestamp": 1748862033

}

```

### ROOM\_SWITCH[](#room_switch "Direct link to ROOM_SWITCH")

Generated when participants move between rooms using doors:

```

{

"type": "ROOM_SWITCH",

"metadata": {

"destination_room": "kitchen",

"destination_wall": "wall1"

},

"timestamp": 1748860201

}

```

### WALL\_SWITCH[](#wall_switch "Direct link to WALL_SWITCH")

Generated when participants navigate between walls within the same room:

```

{

"type": "WALL_SWITCH",

"metadata": {

"room": "Shared Room",

"wall": "0"

},

"timestamp": 1748860200

}

```

### ENTER\_DEVICE\_CLOSEUP[](#enter_device_closeup "Direct link to ENTER_DEVICE_CLOSEUP")

Generated when participants click on a device to enter its detailed interaction view:

```

{

"type": "ENTER_DEVICE_CLOSEUP",

"metadata": {

"device": "coffee_machine"

},

"timestamp": 1748860202

}

```

### EXIT\_DEVICE\_CLOSEUP[](#exit_device_closeup "Direct link to EXIT_DEVICE_CLOSEUP")

Generated when participants exit from device closeup view back to wall view:

```

{

"type": "EXIT_DEVICE_CLOSEUP",

"metadata": {

"device": "coffee_machine"

},

"timestamp": 1748860203

}

```

### ABORT\_TASK[](#abort_task "Direct link to ABORT_TASK")

Generated when participants explicitly abandon a task:

```

{

"type": "ABORT_TASK",

"metadata": {

"task_id": "make_coffee",

"abort_reason": "I believe this task is impossible."

},

"timestamp": 1748862023

}

```
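An external engine or an analysis script often needs to condense a stream of these logs. The sketch below counts logs by type and measures how long each task ran, from `TASK_BEGIN` to whichever end event follows. It assumes the `type` / `metadata` / `timestamp` layout shown in this section and treats timestamps as Unix seconds, which the example values suggest but the page does not state. The function name is hypothetical.

```python
from collections import Counter

END_EVENTS = ("TASK_COMPLETED", "TASK_TIMEOUT", "ABORT_TASK")


def summarize_logs(logs: list):
    """Return (counts per log type, {task_id: (end_event, seconds_elapsed)})."""
    counts = Counter(log["type"] for log in logs)
    started = {}
    outcomes = {}
    for log in sorted(logs, key=lambda entry: entry["timestamp"]):
        if log["type"] == "TASK_BEGIN":
            started[log["metadata"]["task_id"]] = log["timestamp"]
        elif log["type"] in END_EVENTS:
            task_id = log["metadata"]["task_id"]
            if task_id in started:
                elapsed = log["timestamp"] - started.pop(task_id)
                outcomes[task_id] = (log["type"], elapsed)
    return counts, outcomes


logs = [
    {"type": "TASK_BEGIN", "metadata": {"task_id": "make_coffee"}, "timestamp": 1748860190},
    {"type": "ENTER_DEVICE_CLOSEUP", "metadata": {"device": "coffee_machine"}, "timestamp": 1748860202},
    {"type": "DEVICE_INTERACTION",
     "metadata": {"device_id": "coffee_machine", "interaction": {"name": "Power", "value": True}},
     "timestamp": 1748860205},
    {"type": "TASK_COMPLETED", "metadata": {"task_id": "make_coffee"}, "timestamp": 1748862020},
]
counts, outcomes = summarize_logs(logs)
# outcomes["make_coffee"] is ("TASK_COMPLETED", 1830)
```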

## Implementation Examples[](#implementation-examples "Direct link to Implementation Examples")

### Study: Impossible Task Detection[](#study-impossible-task-detection "Direct link to Study: Impossible Task Detection")

```

{

"explanation_trigger": "pull",

"explanation_engine": "integrated",

"explanation_rating": "like",

"integrated_explanation_engine": {

"contradiction_01": "This task cannot be completed because the security system prevents the coffee machine from operating during night hours.",

"safety_override_01": "The smoke detector has triggered an automatic shutdown of all kitchen appliances.",

"energy_limit_01": "The home's energy management system has reached its daily limit and disabled non-essential devices."

}

}

```

### Study: AI-Powered Explanations[](#study-ai-powered-explanations "Direct link to Study: AI-Powered Explanations")

```

{

"explanation_trigger": "push",

"explanation_engine": "external",

"external_explanation_engine": {

"external_engine_type": "rest",

"external_explanation_engine_api": "https://ai-explainer.your-lab.edu/api"

},

"explanation_rating": "like"

}

```

---

# Game Config Schema

The `game.json` file defines the full structure of a smart home simulation used in our game-based studies. It coordinates all key components that drive user experience, system behavior, and experimental logic.

This configuration includes:

* The **environment** context (e.g., current time, temperature, weather, etc. within the simulated smart home)

* The **rules** that govern smart home automation

* A set of **tasks** players must complete

* Definitions for **rooms**, **walls**, and **doors**

* Interactive **devices** with properties and states

***

## Game Config

### Game Schema

```
{
  "type": "object",
  "title": "Game Configuration File",
  "description": "Configuration File of the game",
  "properties": {
    "environment": {
      "$ref": "environmentSchema.json"
    },
    "rules": {
      "type": "array",
      "items": {
        "$ref": "ruleSchema.json"
      }
    },
    "tasks": {
      "type": "object",
      "description": "Tasks configuration with metadata and task list",
      "properties": {
        "ordered": {
          "type": "string",
          "description": "Whether tasks must be completed in order",
          "enum": [
            "true",
            "false"
          ]
        },
        "timer": {
          "type": "number",
          "description": "Global timer for all tasks in seconds"
        },
        "abortable": {
          "type": "boolean",
          "description": "Whether tasks can be aborted globally"
        },
        "tasks": {
          "type": "array",
          "description": "Array of individual tasks",
          "items": {
            "$ref": "taskSchema.json"
          },
          "minItems": 1
        }
      },
      "required": [
        "tasks"
      ]
    },
    "rooms": {
      "type": "array",
      "items": {
        "$ref": "roomSchema.json"
      }
    }
  }
}
```
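
One detail in the schema above is easy to miss: `ordered` is a string restricted to `"true"` or `"false"`, whereas `abortable` is a real boolean. The sketch below hand-checks only the top-level `tasks` object against the constraints shown in the schema. It is a simplified illustration, not the platform's validator; the function name `check_tasks_section` is hypothetical, and individual task items are treated as opaque because `taskSchema.json` is not reproduced here.

```python
def check_tasks_section(section):
    """Return a list of problems in the top-level "tasks" object of game.json."""
    errors = []

    # "tasks" is the only required key and needs at least one item (minItems: 1).
    task_list = section.get("tasks")
    if task_list is None:
        errors.append('"tasks" is required')
    elif not isinstance(task_list, list) or len(task_list) < 1:
        errors.append('"tasks" must be an array with at least one item')

    # "ordered" is a string enum, so the JSON booleans true/false are rejected.
    if "ordered" in section and section["ordered"] not in ("true", "false"):
        errors.append('"ordered" must be the string "true" or "false"')

    # "timer" is a number (seconds); Python bools are excluded explicitly
    # because bool is a subclass of int.
    if "timer" in section:
        timer = section["timer"]
        if isinstance(timer, bool) or not isinstance(timer, (int, float)):
            errors.append('"timer" must be a number')

    # "abortable" is a genuine boolean.
    if "abortable" in section and not isinstance(section["abortable"], bool):
        errors.append('"abortable" must be a boolean')

    return errors
```

For full validation, a JSON Schema validator that resolves the referenced sub-schemas would be the more robust choice; this sketch only makes the type expectations explicit.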

Each section is defined as a modular schema and can be validated independently. For detailed documentation, refer to the dedicated pages for each section below:

| Section | Description | Details |
| --- | --- | --- |
| **Environment** | Contextual variables that may influence rules | [Environment Schema](https://exmartlab.github.io/SHiNE-Framework/SHiNE-Framework/game-config/game_schema/environment.md) |
| **Rules** | Automation logic that reacts to environment and devices | [Rules Schema](https://exmartlab.github.io/SHiNE-Framework/SHiNE-Framework/game-config/game_schema/rules.md) |
| **Tasks** | Experimental tasks with goals, device resets, and timers | [Tasks Schema](https://exmartlab.github.io/SHiNE-Framework/SHiNE-Framework/game-config/game_schema/tasks.md) |
| **Rooms & Walls** | Visual and navigational layout of the house | [Walls & Rooms Schema](https://exmartlab.github.io/SHiNE-Framework/SHiNE-Framework/game-config/game_schema/walls.md) |
| **Devices** | Smart home devices with states and properties | [Devices Schema](https://exmartlab.github.io/SHiNE-Framework/SHiNE-Framework/game-config/game_schema/devices.md) |
| **Device Interaction** | Device action/state schemas (numerical, boolean, etc.) | [Interaction Schema](https://exmartlab.github.io/SHiNE-Framework/SHiNE-Framework/game-config/game_schema/interaction_types.md) |

---

# Devices

## Introduction

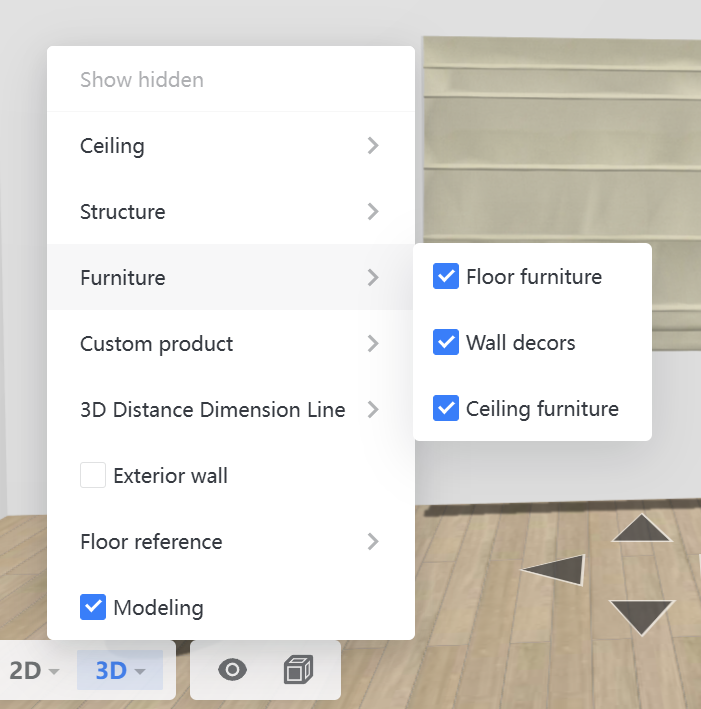

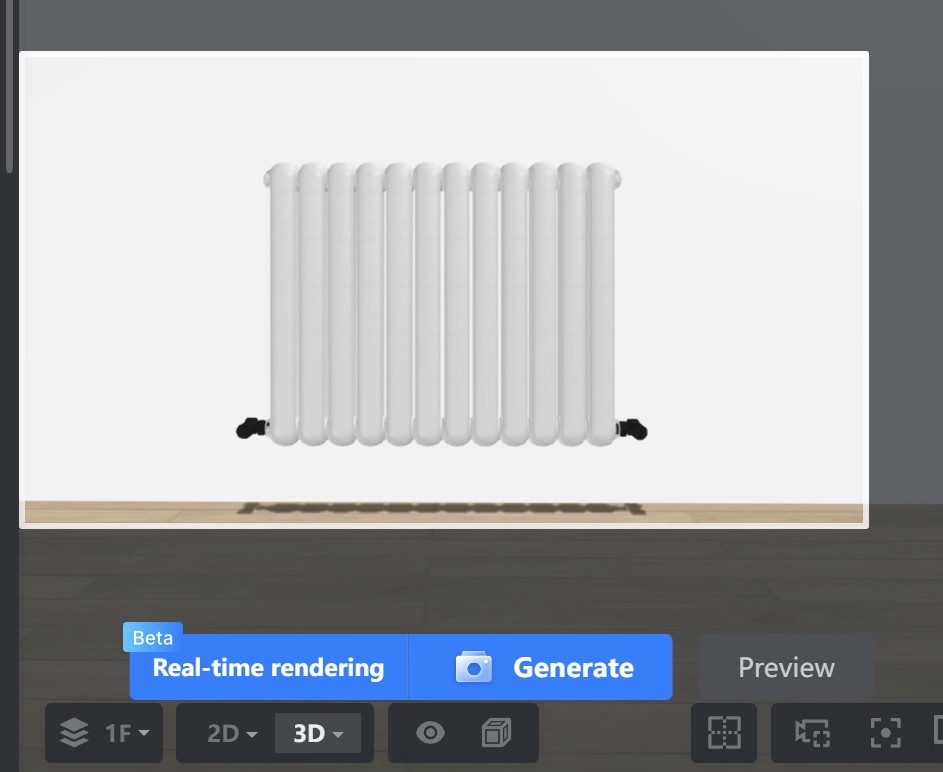

Within the simulation environment, devices are interactive elements that mimic smart objects commonly found in domestic or workplace contexts, such as lights, thermostats, smart meters, coffee machines, smart speakers, smart TVs, and blinds. Each device is a structured object placed in the 3D simulated environment and is associated with a set of interaction modalities and visual states. Participants can interact with these devices, and the rule engine can monitor or change each device's state.

Devices are embedded in the simulation space by assigning them to specific walls in the environment (see [Wall Configuration](https://exmartlab.github.io/SHiNE-Framework/SHiNE-Framework/game-config/game_schema/walls.md)). While each wall can host multiple devices, each device instance is placed on only one wall. This constraint allows for a coherent mapping of the virtual environment and ensures consistent spatial interactions.

**Device Configuration:** Always start by defining the basic properties (name, id, position, interactions) before adding complex visual states. This makes it easier to test and debug your device configuration.

## JSON Schema

### Device Schema

```
{
  "type": "object",
  "title": "Device",
  "description": "A device in the game",
  "properties": {
    "name": {
      "type": "string",
      "description": "Name of the device"
    },
    "id": {
      "type": "string",
      "description": "Unique identifier for the device, used for referencing in rules and tasks"
    },
    "position": {
      "$ref": "devicePositionSchema.json"
    },
    "interactions": {
      "type": "array",
      "items": {
        "oneOf": [
          {
            "$ref": "booleanActionScheme.json"
          },
          {
            "$ref": "numericalActionScheme.json"
          },
          {
            "$ref": "dynamicPropertyScheme.json"
          },
          {
            "$ref": "genericActionScheme.json"
          },
          {
            "$ref": "statelessActionScheme.json"
          }
        ]
      }
    },
    "visualState": {
      "type": "array",
      "items": {
        "$ref": "visualStateSchema.json"
      },
      "minItems": 1
    }
  },
  "required": [
    "name",
    "interactions",
    "position",
    "visualState"
  ],
  "additionalProperties": false
}
```

### Top-Level Properties

| Property | Type | Required | Description |
| --- | --- | --- | --- |
| `name` | `string` | **Yes** | The human-readable name of the device, which may be displayed to the player in the UI. |
| `id` | `string` | No | A unique machine-readable identifier for the device. This ID is crucial for linking the device to game logic, such as rules, events, or task objectives. It is highly recommended to provide this for any interactive device. |
| `position` | `object` | **Yes** | An object defining the device's placement and orientation in the game world. This property references an external schema. |
| `interactions` | `array` | **Yes** | An array of interaction objects that define how a player can engage with the device. Each element in the array must conform to one of the specified action schemas. |
| `visualState` | `array` | **Yes** | An array defining the different visual states the device can be in. This allows the device's appearance to change based on its internal state (e.g., "on" vs. "off"). There must be at least one visual state defined. |
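
Because the device schema sets `"additionalProperties": false`, a misspelled key such as `visualStates` is rejected rather than silently ignored. The sketch below mirrors only the top-level rules from the table above: required keys, no unknown keys, and at least one visual state. The function name `check_device` is hypothetical, and the nested position, interaction, and visual-state objects are not inspected.

```python
REQUIRED_KEYS = {"name", "interactions", "position", "visualState"}
ALLOWED_KEYS = REQUIRED_KEYS | {"id"}  # "id" is optional in the schema


def check_device(device):
    """Return a list of top-level problems for a single device object."""
    errors = []
    keys = set(device)

    for key in sorted(REQUIRED_KEYS - keys):
        errors.append(f"missing required property: {key}")

    # additionalProperties is false, so unknown keys are errors.
    for key in sorted(keys - ALLOWED_KEYS):
        errors.append(f"unknown property: {key}")

    # visualState must contain at least one entry (minItems: 1).
    visual_state = device.get("visualState")
    if isinstance(visual_state, list) and len(visual_state) < 1:
        errors.append("visualState must contain at least one entry")

    return errors
```

For example, a device that spells the key `visualStates` yields two messages from this check: one for the missing `visualState` and one for the unknown property.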

### Property: Device Identification

Each device is identified by a human-readable `name`, which is required, and a unique system `id`, which the schema treats as optional but which is strongly recommended for any device referenced by rules or tasks.

* `name`: The display name shown to users (e.g., "Deep Fryer", "Coffee Machine"). This field enhances usability and participant immersion by providing intuitive, natural-language names for each device.

* `id`: A unique system-wide identifier used for all internal referencing. The `id` is essential for:

  * Rules: Referencing devices in preconditions and actions (see [Rule](https://exmartlab.github.io/SHiNE-Framework/SHiNE-Framework/game-config/game_schema/rules.md)).

  * Tasks: Specifying devices in goals and default properties (see [Task](https://exmartlab.github.io/SHiNE-Framework/SHiNE-Framework/game-config/game_schema/tasks.md)).

  * Game Logic: Managing internal device state and inter-device interactions (see [Interactions](https://exmartlab.github.io/SHiNE-Framework/SHiNE-Framework/game-config/game_schema/interaction_types.md)).

```
{
  "name": "Deep Fryer",
  "id": "deep_fryer",
  // ... other properties
}
```
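
Since rules and tasks refer to devices by `id`, a duplicated `id` would make those references ambiguous. The JSON Schema shown earlier does not enforce uniqueness across devices, so a small check along the following lines may be useful when assembling a configuration. The function name `duplicate_ids` and the sample device list are illustrative assumptions.

```python
from collections import Counter


def duplicate_ids(devices):
    """Return the sorted list of device ids that occur more than once.

    Devices without an "id" are skipped, since the property is optional.
    """
    counts = Counter(device["id"] for device in devices if "id" in device)
    return sorted(device_id for device_id, n in counts.items() if n > 1)


# Hypothetical device list; only the identification fields are shown.
devices = [
    {"name": "Deep Fryer", "id": "deep_fryer"},
    {"name": "Coffee Machine", "id": "coffee_machine"},
    {"name": "Second Fryer", "id": "deep_fryer"},
    {"name": "Decorative Lamp"},
]

print(duplicate_ids(devices))
```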

### Property: Position

The `position` property determines where a device appears on a wall and how it is displayed. It contains the following attributes that define its transform.

* `x`: The horizontal coordinate (in pixels) on the wall.

* `y`: The vertical coordinate (in pixels) on the wall.

* `scale`: A multiplier for the device's size (e.g., 1 for original size, 0.5 for half size).

* `origin`: The reference point used for positioning (`0` for the top-left corner, `1` for the center).

**Origin Property:** The `origin` value dictates which part of the device's image is placed at the (x, y) coordinates:

* `origin: 0`: Aligns the top-left corner of the image to the (x, y) point.

* `origin: 1`: Aligns the center of the image to the (x, y) point.
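
To make the effect of `origin` and `scale` concrete, the sketch below computes where the top-left corner of a device image would land on the wall. It follows the two origin values exactly as described above and assumes that `scale` is applied uniformly to the image's width and height. The function name `top_left` and the image dimensions are hypothetical; the platform's actual rendering is handled by Phaser and may differ in detail.

```python
def top_left(position, image_width, image_height):
    """Return the (x, y) of the rendered image's top-left corner, in pixels.

    position: dict with "x", "y", "scale", and "origin" (0 or 1), as documented above.
    image_width / image_height: unscaled image size in pixels (assumed known).
    """
    width = image_width * position["scale"]
    height = image_height * position["scale"]

    if position["origin"] == 1:
        # Center of the image sits on (x, y), so shift by half the scaled size.
        return (position["x"] - width / 2, position["y"] - height / 2)

    # origin 0: the top-left corner itself sits on (x, y).
    return (position["x"], position["y"])


# Hypothetical 200x100 image drawn at half size.
centered = {"x": 400, "y": 300, "scale": 0.5, "origin": 1}
cornered = {"x": 400, "y": 300, "scale": 0.5, "origin": 0}

print(top_left(centered, 200, 100))
print(top_left(cornered, 200, 100))
```

With `origin: 1`, changing `scale` keeps the device centered on the same point, which tends to make size adjustments easier than with `origin: 0`.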

These positioning parameters are fully compatible with Phaser 3’s GameObject model, which underpins the simulation’s rendering layer. For more information:

* [Scale Documentation](https://docs.phaser.io/api-documentation/namespace/gameobjects-components-transform)